Background

The client, a vehicle-history platform trusted by insurers, marketplaces, and dealers throughout its home region, runs on timely data pulled straight from automaker websites. Every millisecond of delay threatens real‑time quotes and insurance checks.

Behind every report the client provides is a web-scraping workflow that logs in with authorized user credentials, collects fresh vehicle records, and feeds them into the client’s API. That pipeline is invisible to end users until something falls apart.

The Task at Hand

When one major OEM suddenly blocked traffic, the client’s live API started timing out and risking SLA penalties.

Grepsr came to the rescue.

As the client’s data‑infrastructure partner, we worked to restore access fast, keep every request compliant, and leave the system stronger than before.

The entire fix went live in a few days.

We restored full access and left the client with a sturdier, policy-compliant pipeline for the long run.

Key Points

- Firewall cleared, compliance intact. Valid logins and whitelisted IPs reopened the OEM’s portal, without spoofing or brute force.

- Zero hiccups for end users. Throughout the transition, every client API call landed on time with no retries, duplicates, or visible lag.

- 99.9% data delivery accuracy. Clean results kept coming across ten high-security OEM sites, including those with the toughest anti-bot rules.

- 100% uptime post-integration. Since going live, the rebuilt pipeline hasn’t missed a single request.

- 5x faster rollout than industry norms. A proxy, IP map, and firewall setup that usually takes 10–15 business days was finished in a fraction of that time, keeping the client comfortably clear of disruption.

Challenges

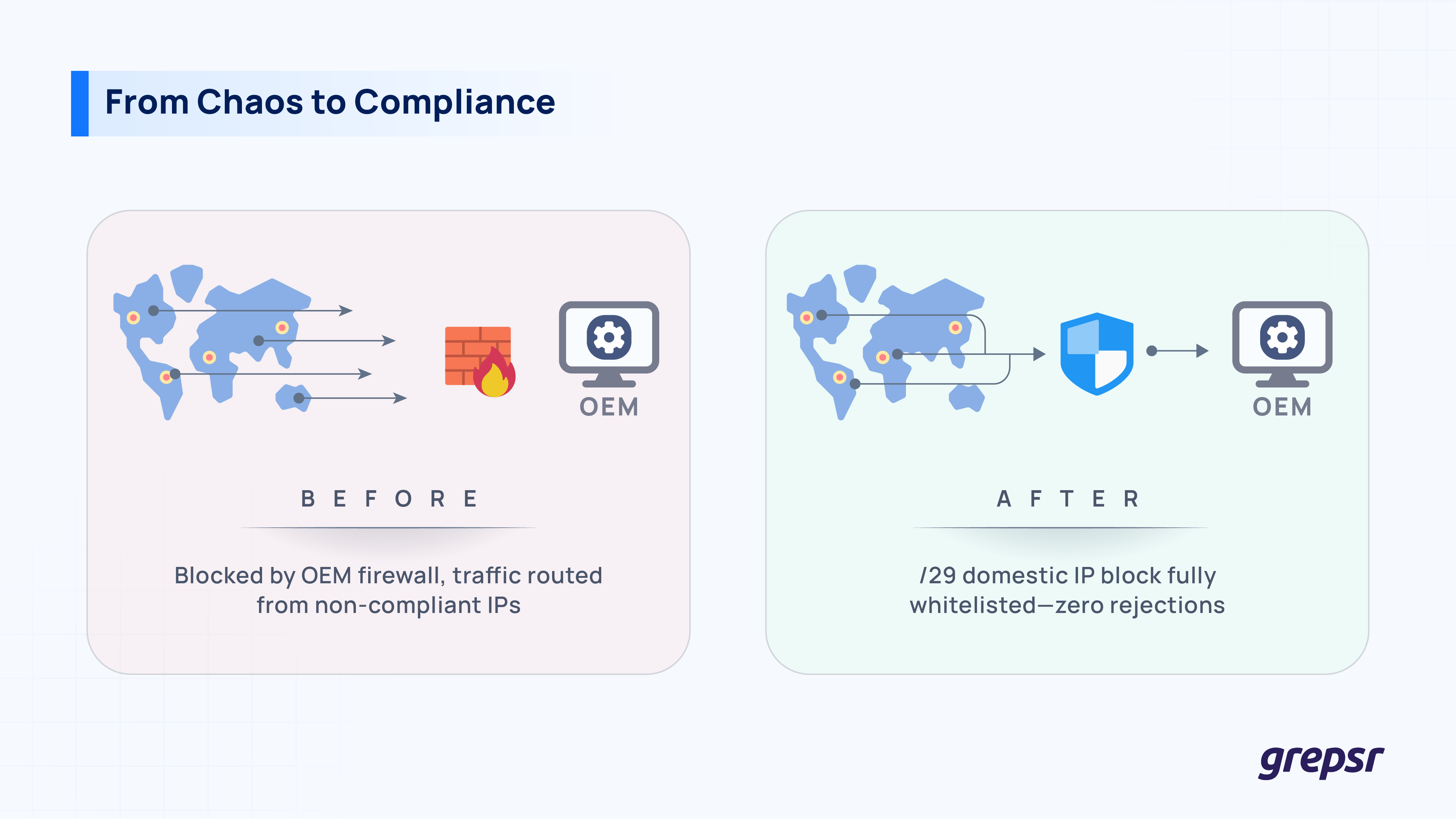

The client’s data feed was blocked overnight. One automaker’s portal detected logins coming from multiple countries at once and shut the door. To make matters worse, the client’s previous data vendor pushed a product update at the worst possible time. That is why they reached out to Grepsr, and we’ve been working together ever since.

Digging in, we uncovered a three-layer problem:

- Geographic mismatch. Their traffic exited through a rotating pool of global IPs, so to the automakers, it looked as though credentials were being shared in half a dozen countries at once.

- Proxy sprawl. The existing proxy stack couldn’t guarantee that every request would leave from the expected region. Random exits meant random blocks.

- No quick fix in-house. Their engineers are data experts, not firewall negotiators. Whitelisting static addresses inside an automaker’s security perimeter was out of scope.

The business impact was immediate and non-negotiable: if the client’s API stayed down, insurers and marketplaces couldn’t run VIN checks, dealers couldn’t quote trade-in prices, and every missed call eroded the client’s “always-on” reputation.

KPIs

Before we wrote a single line of code, we sat down with the client’s leadership and agreed on three metrics that would decide whether our work was a success or a footnote:

- 99.9% data-delivery accuracy. If a registration date or mileage record comes back wrong even once, trust wears off. Our rebuilt pipeline had to get 999 out of every 1,000 calls perfectly right, across ten high-security OEM portals.

- 100% uptime for live API calls. The client’s customers don’t schedule downtime, so neither could we.

- A rollout at five times the industry speed. Firewall whitelisting, static IP reservation, proxy rewiring: projects like this usually drag on for 10 to 15 business days. We agreed to do it in a fifth of that time because the market wasn’t going to wait.

Our Solutions

Neutralizing the firewall block

Brute-force scraping wasn’t going to fool the automaker’s security team, and it certainly wouldn’t survive a second audit. Instead, we took the “insider” route.

First, we gathered a fresh pool of legitimate client credentials and mapped each one to an expected volume pattern. Then we routed every request through a small, static block of domestic IPs so geo-filters saw local, human-scale traffic.

Behind the scenes our proxy accepted those requests, stamped them with the pre-approved addresses, and forwarded them upstream. When the site on the other end reviewed logs, the anomaly flags vanished. Nothing looked artificial, nothing looked shared, and nothing triggered the firewall rules that had killed the client’s access in the first place.
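One way to picture the credential-to-address routing described above is a deterministic mapping, so that a given login always exits from the same domestic address. The sketch below is illustrative only: the address block is a reserved documentation range, and `exit_ip_for` and the credential IDs are hypothetical names, not the production proxy logic.

```python
import hashlib
from ipaddress import ip_network

# Placeholder static block (reserved documentation range, not real addresses).
STATIC_BLOCK = ip_network("203.0.113.8/29")
EXIT_IPS = [str(ip) for ip in STATIC_BLOCK.hosts()]


def exit_ip_for(credential_id: str) -> str:
    """Pin a credential to one exit address from the static block.

    Hashing the credential ID keeps the mapping stable across restarts,
    so the same login never appears to come from two places.
    """
    digest = hashlib.sha256(credential_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:4], "big") % len(EXIT_IPS)
    return EXIT_IPS[index]


if __name__ == "__main__":
    for cred in ("dealer-account-01", "dealer-account-02"):
        print(cred, "->", exit_ip_for(cred))
```

A stable hash is only one possible choice; an explicit lookup table would work just as well and is easier to audit when the pool of credentials is small.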

Custom IP whitelisting

We created a /29 block in a data center, documented the range, and shipped the list to the client’s point of contact.

Once addresses were whitelisted, our traffic gained some immunity. No more sudden lockouts when credentials are cycled.

Internally, the static block simplified life too: monitoring became deterministic (one IP down equals one red light), and the support team finally had an address they could paste into status pages.
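For readers less familiar with CIDR notation, a /29 block spans eight consecutive addresses (how many are usable depends on the hosting provider). The following sketch uses Python's standard `ipaddress` module to describe such a range and to check whether an outgoing address falls inside it. The block shown is a reserved documentation range used as a stand-in, and the helper names are assumptions.

```python
from ipaddress import ip_address, ip_network

# Placeholder /29 (reserved documentation range), standing in for the real block.
BLOCK = ip_network("198.51.100.16/29")


def describe_block(block):
    """Summarize a block in a form that can be shared with a point of contact."""
    return {
        "cidr": str(block),
        "total_addresses": block.num_addresses,
        "first": str(block[0]),
        "last": str(block[-1]),
    }


def is_in_block(addr: str) -> bool:
    """Return True if an exit address belongs to the whitelisted range."""
    return ip_address(addr) in BLOCK


if __name__ == "__main__":
    print(describe_block(BLOCK))
    print(is_in_block("198.51.100.20"))
```

A membership check like `is_in_block` is also a natural building block for the deterministic monitoring mentioned above, since every expected exit address is known in advance.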

Delivering stability

To guarantee a silent handover, we built a shadow lane: production requests were cloned, routed through the new geo-specific block, and compared against live responses in real time.

We let that clone run for 48 hours. When the difference stayed at zero and the latency curve remained flat, we flipped the switch at 02:00 local time, when query volume is at its thinnest.

The result was as expected: not a single failed call, not a single duplicate fetch, and zero re-processing events. Even during the credential-sequencing learning curve, our dashboards stayed stable.
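The shadow-lane comparison can be sketched roughly as follows: each cloned request's response is compared with the live one, and cut-over is only considered once the mismatch list stays empty. This is a simplified illustration under assumed names (`ShadowReport`, the `fetched_at` field, the sample payloads); the real comparison also tracked latency, which is omitted here.

```python
from dataclasses import dataclass, field


@dataclass
class ShadowReport:
    """Tracks how often the shadow lane disagrees with production."""

    compared: int = 0
    mismatches: list = field(default_factory=list)

    def record(self, request_id, live, shadow, ignore=("fetched_at",)):
        """Compare two response payloads, skipping fields expected to differ."""
        self.compared += 1
        live_view = {k: v for k, v in live.items() if k not in ignore}
        shadow_view = {k: v for k, v in shadow.items() if k not in ignore}
        if live_view != shadow_view:
            self.mismatches.append(request_id)

    @property
    def ready_for_cutover(self):
        """True only if something was compared and nothing differed."""
        return self.compared > 0 and not self.mismatches


if __name__ == "__main__":
    report = ShadowReport()
    live = {"vin": "TESTVIN0000000001", "mileage": 42000, "fetched_at": "t1"}
    shadow = {"vin": "TESTVIN0000000001", "mileage": 42000, "fetched_at": "t2"}
    report.record("req-1", live, shadow)
    print(report.ready_for_cutover)
```

Ignoring volatile fields such as timestamps keeps the diff meaningful; otherwise every pair of responses would register as a mismatch.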

Doing it all ethically

Every part of our solution respected automakers’ published security policies. We avoided spoofing headers, bypassing captchas, or hammering retry loops.

Instead, we mirrored expected human behavior, respected rate limits, and used only authorized credentials.

While engineering ran point on routing and credentials, our customer-success squad kept the client’s stakeholders in the loop with twice-daily Slack updates and a shared status board. Any time an OEM responded to a whitelist request or changed a login flow, the client heard about it within the hour.
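As an illustration of the rate-limit discipline described in this section, the sketch below enforces a minimum gap between requests for each credential. The class name, the interval value, and the credential IDs are assumptions made for the example; actual limits depend on each automaker's published policy.

```python
import time


class MinIntervalThrottle:
    """Allow at most one request per `min_interval` seconds for each credential."""

    def __init__(self, min_interval, clock=time.monotonic):
        self.min_interval = min_interval
        self.clock = clock  # injectable so the logic can be tested without sleeping
        self._last_sent = {}

    def wait_time(self, credential_id):
        """Seconds the caller should still wait; 0.0 means it may send now."""
        last = self._last_sent.get(credential_id)
        if last is None:
            return 0.0
        return max(0.0, self.min_interval - (self.clock() - last))

    def mark_sent(self, credential_id):
        """Record that a request was just sent for this credential."""
        self._last_sent[credential_id] = self.clock()


if __name__ == "__main__":
    throttle = MinIntervalThrottle(min_interval=2.0)  # hypothetical limit
    throttle.mark_sent("dealer-account-01")
    print(throttle.wait_time("dealer-account-01"))
```

Tracking the interval per credential rather than globally keeps each login's traffic at a human scale even when several logins are active at once.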

A note on the 5x faster promise

Compressing a two-week project into a multi-day sprint demanded parallelism.

While one team secured the IP block, another scripted the proxy updates, and a third composed the firewall-change documentation the automakers needed for internal approval. Daily stand-ups turned into four-hour review cycles; blockers were cleared in ninety-minute windows.

Outcome

We fixed the block and strengthened the client’s pipeline in one rapid push:

- 99.9% accuracy on every vehicle-history call

- 100% uptime since cut-over

- 5x faster rollout than the usual IP-whitelisting playbook

- Zero customer tickets and no spike in retries during or after the switch

The client’s users kept pulling instant, reliable reports without noticing a thing.

If your own data feeds are running into firewalls, geo-locks, or stubborn IP blocks, let Grepsr clear the path and keep your service running strong.