According to Charles Babbage, one of the pioneers of computing, “Errors using inadequate data are much less than those using no data at all.” Babbage lived in the 19th century, when the world had not yet fully realized the importance of data, at least not in the commercial sense. Had Babbage lived to see the giant strides computer technology has taken in the 21st century, and the preeminence of data in all walks of life, he probably would have rephrased his statement to emphasize the quality of data rather than data itself.

Big data has been a buzzword for quite some time now; the term was coined in 1997 by Michael Cox and David Ellsworth. The world will likely remain perplexed and intimidated by the spell of big data until it becomes a normal part of everyday business. There is no denying that businesses have a bigger stake in big data. What often remains understated in today’s business rhetoric, however, is the quality of that data.

Compounding Effects of Bad Data

According to Larry English, president of Information Impact International, Inc., “Poor information quality costs organizations 15 to 25% of operating revenue wasted in recovery from process failure and information scrap and rework.” The Data Warehousing Institute (TDWI) says “The cost of bad or ‘dirty’ data exceeds $600 billion for US businesses annually.”

A growing number of companies have begun to report how neglecting data quality has hurt their business in the long run. The real magnitude of poor data quality is often not felt until its effects resurface downstream, and by the time companies realize the importance of data, the harm is often irreversible.

Data plays a crucial role in making the right decisions and taking the right actions at the right time, thereby improving a company’s operational efficiency. Low-quality, faulty, or dirty data has compounding effects on the whole business process: it misguides decision-making, throws operations into disarray, and drains revenue into rerunning projects.

Beyond that, missed opportunities, damaged customer relations, unforeseen financial liabilities, and lost business can all have both measurable and immeasurable long-term consequences. It is usually not possible to retroactively fix the problems that low-quality data has caused. This is why Ted Friedman, vice president and distinguished analyst at Gartner, Inc., says, “Data is useful. High-quality, well-understood, auditable data is priceless.”

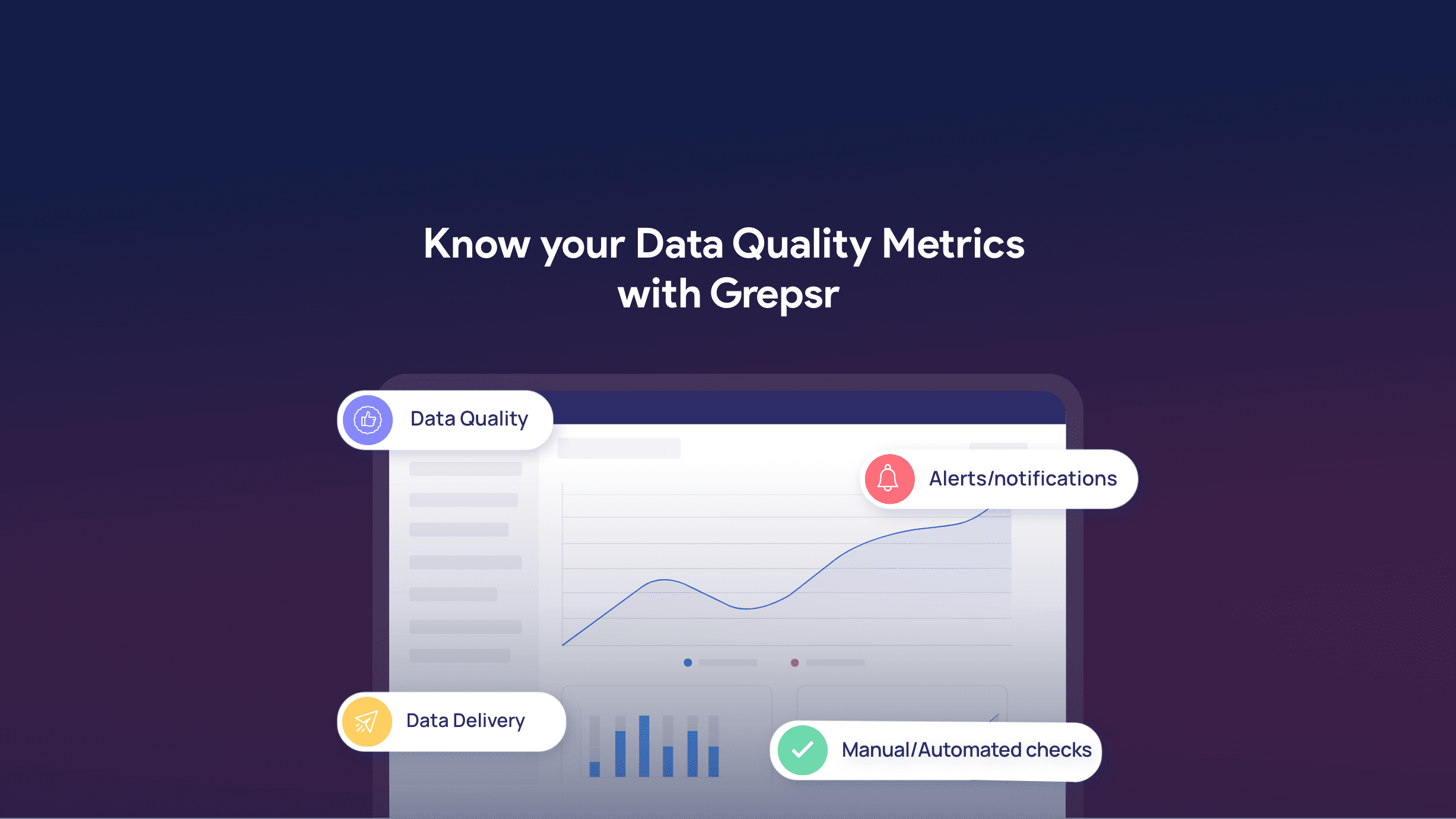

All that Matters to Grepsr is Data Quality Assessment

With the goal of maintaining high standards of data quality, Grepsr has run a quality-focused setup ever since our inception. Every data specialist on our team strives to apply best practices in data management and follows every quality assessment step, so that data defects are prevented from the very beginning. Focused attention to parsing, cleansing, standardizing, and verifying data under a closely monitored quality-control system ensures that the quality of our output is watertight.

From day one of its service launch, Grepsr has been especially attentive to and meticulous about its data quality. We understand that, above everything else, data quality is the first precondition for expanding our market base and establishing long-term business relationships with our clients. Owing to our efforts to maintain quality, we have been making steady progress.

Amit Chaudhary, co-founder, Grepsr

As a startup, Grepsr depends entirely on the trust it has earned through the quality of its service. We have been guided by an uncompromising commitment to providing high-quality data to our customers. The fact that a growing number of big companies do business with us suggests that we are heading in the right direction.

Subrat Basnet, co-founder, Grepsr

Only experienced, well-versed data experts with a high level of data sensitivity can acquire, process, and manage data in a way that ensures a quality outcome. Every data expert on our team enjoys handling data, and each is also aware of the importance of data as a product. Because technology alone does not guarantee high-quality data, Grepsr relies on both technology and the resourcefulness of its data experts to maintain quality in its services.

Quality Control Measures at Grepsr

Once a task is underway, we take special care to prevent the factors that contribute to data inaccuracy. We work with the understanding that incorrect data can trigger a domino effect, causing a lot of hassle downstream. We know the major causes of bad data: outdated or inefficient software or scripts, inadequate knowledge of or skill with the software, time pressure when handling large volumes of data, inattentiveness during data entry or processing, and so on. We therefore take step-by-step measures to avoid each of them.

While inaccuracy, duplication, inconsistency, inadequacy, conflict, obscurity, and obsolescence are the major attributes of low-quality data, high-quality data is best defined by completeness, consistency, accuracy, reliability, relevance, timeliness, reasonableness, and proper format or structure.
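Several of these dimensions can be measured directly. As an illustration only, and not a description of Grepsr’s actual tooling, here is a minimal Python sketch that scores a batch of scraped records on completeness, duplication, and timeliness. The field names, the `(name, url)` deduplication key, and the seven-day freshness window are hypothetical assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schema for scraped product records (illustrative only).
REQUIRED_FIELDS = ("name", "price", "url", "scraped_at")
DEDUP_KEY = ("name", "url")  # assumed notion of "same record"


def completeness(records):
    """Fraction of required field values that are present and non-empty."""
    cells = len(records) * len(REQUIRED_FIELDS)
    filled = sum(
        1
        for r in records
        for f in REQUIRED_FIELDS
        if r.get(f) not in (None, "")
    )
    return filled / cells if cells else 1.0


def duplicate_rate(records):
    """Fraction of records whose dedup key already appeared earlier."""
    seen, dupes = set(), 0
    for r in records:
        key = tuple(r.get(f) for f in DEDUP_KEY)
        if key in seen:
            dupes += 1
        else:
            seen.add(key)
    return dupes / len(records) if records else 0.0


def timeliness(records, now, max_age):
    """Fraction of records scraped no more than max_age before now."""
    fresh = sum(
        1
        for r in records
        if r.get("scraped_at") is not None and now - r["scraped_at"] <= max_age
    )
    return fresh / len(records) if records else 1.0


def quality_profile(records, now, max_age=timedelta(days=7)):
    """Bundle the three ratios; the 7-day window is an assumed default."""
    return {
        "completeness": completeness(records),
        "duplicate_rate": duplicate_rate(records),
        "timeliness": timeliness(records, now, max_age),
    }


NOW = datetime(2024, 1, 10, tzinfo=timezone.utc)
SAMPLE = [
    {"name": "Widget", "price": "9.99", "url": "https://example.com/w",
     "scraped_at": NOW - timedelta(days=1)},
    {"name": "Widget", "price": "9.99", "url": "https://example.com/w",
     "scraped_at": NOW - timedelta(days=1)},
    {"name": "Gadget", "price": "", "url": "https://example.com/g",
     "scraped_at": NOW - timedelta(days=30)},
]

profile = quality_profile(SAMPLE, now=NOW)
print(profile)
```

In the sample, completeness is 11/12 because one price is empty, the duplicate rate is 1/3 because the second Widget row repeats the first, and timeliness is 2/3 because the Gadget row is 30 days old. Expressing each dimension as a ratio makes batches easy to compare, though sensible alert thresholds depend on the job.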

We take important procedural measures to make sure that no bad data creeps into our data management process and affects the quality and reliability of our product. Our quality control measures include:

- Identifying the set of rules that govern content- and context-specific needs

- Cleansing and deduplicating data

- Carrying out data consistency assessments

- Assessing whether the data matches the real-world values it represents

- Ensuring that the data has the desired actionable information

- Verifying that the resulting data is in the desired form and structure
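The steps above can be sketched as a small validation pass. This is a hypothetical Python example, not Grepsr’s implementation: the field names, the per-field rules, and the price ceiling used as a plausibility check are all assumptions. It covers cleansing, deduplication, rule checks, a reasonableness check standing in for real-world verification, and a fixed output structure. Cross-source consistency assessment is left out.

```python
import re
from decimal import Decimal, InvalidOperation

# Hypothetical rules for a product-listing job. Each rule returns an
# error message, or None if the value passes.
URL_RE = re.compile(r"^https?://\S+$")
MAX_PLAUSIBLE_PRICE = Decimal("100000")  # assumed sanity ceiling


def check_name(value):
    return None if value else "name is empty"


def check_price(value):
    if value is None:
        return "price is missing or unparseable"
    if value <= 0 or value > MAX_PLAUSIBLE_PRICE:
        return f"price {value} is outside the plausible range"
    return None


def check_url(value):
    return None if URL_RE.match(value) else f"url {value!r} is malformed"


RULES = {"name": check_name, "price": check_price, "url": check_url}


def cleanse(raw):
    """Normalize whitespace, parse the price, and emit a fixed set of fields."""
    name = " ".join(str(raw.get("name") or "").split())
    url = str(raw.get("url") or "").strip()
    price_text = str(raw.get("price") or "").replace("$", "").replace(",", "").strip()
    try:
        price = Decimal(price_text)
        if not price.is_finite():  # reject "NaN" and "Infinity"
            price = None
    except InvalidOperation:
        price = None
    return {"name": name, "price": price, "url": url}


def validate(records):
    """Return (clean, rejected); rejected items are (record, [reasons])."""
    clean, rejected, seen = [], [], set()
    for raw in records:
        rec = cleanse(raw)
        key = (rec["name"].lower(), rec["url"])
        if key in seen:  # deduplicate against records already accepted
            rejected.append((rec, ["duplicate record"]))
            continue
        errors = []
        for field, rule in RULES.items():
            message = rule(rec[field])
            if message is not None:
                errors.append(message)
        if errors:
            rejected.append((rec, errors))
        else:
            seen.add(key)
            clean.append(rec)
    return clean, rejected


SAMPLE = [
    {"name": "  Blue   Widget ", "price": "$1,299.00", "url": "https://example.com/w"},
    {"name": "blue widget", "price": "1299", "url": "https://example.com/w"},
    {"name": "Gadget", "price": "n/a", "url": "example.com/g"},
]

clean, rejected = validate(SAMPLE)
for rec, reasons in rejected:
    print(rec["name"], "->", "; ".join(reasons))
```

Deduplication is checked only against records that have already passed validation, so an invalid first copy does not block a later, valid copy of the same item. The price ceiling is a crude stand-in for checking data against real-world values; in practice that step would depend on the source and the client’s requirements.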
