In the current era of big data, successful businesses collect and analyze vast amounts of data every day. Their major decisions rely on the insights gathered from this analysis, and quality data is the foundation of those insights.

One of the most important characteristics of quality data is consistency: corresponding data fields have the same type and follow a standard format across datasets. Data normalization is the process that achieves this consistency, and it supports better outcomes across the whole company.

What is data normalization?

If you look up web scraping on Google, it is most often defined as a technology that lets you extract web data and present it in a structured format. Data normalization is the process that organizes this raw extracted data into a consistent format, which makes subsequent workflows more efficient.

Generally speaking, it refers to producing clean, consistent data. Its main goal is to reduce or eliminate redundancies and anomalies, and to organize the data so that, when done correctly, it is consistent and standardized across records and fields.

Do you need data normalization?

Simply put: yes! Any data-driven business that wants to succeed and grow should make it a regular part of its workflow. By removing errors and anomalies, normalization simplifies what are usually complicated analyses. The result is a well-oiled, functioning system full of high-quality, reliable and usable data.

Since data normalization makes your workflows and teams more efficient, you can dedicate more resources to increasing your data extraction capabilities. As a result, more high-quality data enters your system, you gain better insight into the areas that matter to your business, and you can make more low-risk, data-backed decisions. Ultimately, this can noticeably improve how your company is run.


How it works

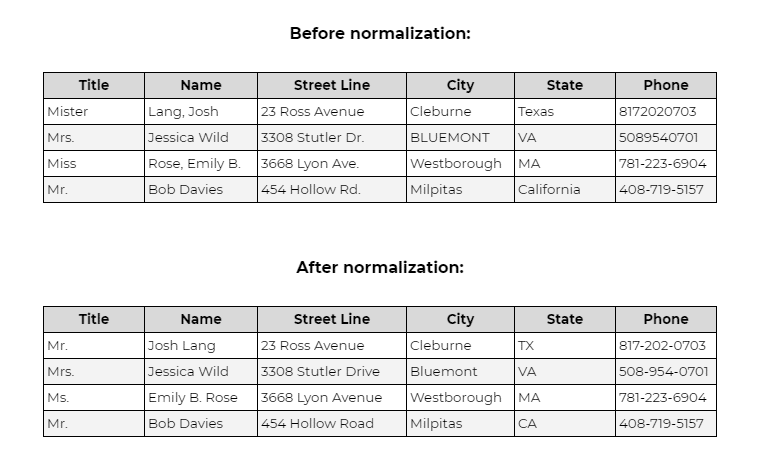

The basic idea of data normalization is to create a standard format for data fields across all datasets. The image above shows an example of a dataset before and after normalization.
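As a rough illustration of field-level standardization, here is a minimal Python sketch. It assumes scraped records arrive as dictionaries with hypothetical fields `name`, `country`, `phone` and `joined`, and that the target formats are trimmed title-case names, two-letter country codes from a small lookup table, digits-only phone numbers and ISO 8601 dates. The field names, formats and lookup table are illustrative assumptions, not a prescribed schema.

```python
import re
from datetime import datetime

# Hypothetical lookup table mapping country spellings to two-letter codes.
COUNTRY_CODES = {
    "us": "US", "usa": "US", "united states": "US",
    "uk": "GB", "united kingdom": "GB", "great britain": "GB",
}

# Date layouts we assume might appear in the raw data, tried in order.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d %b %Y")


def normalize_date(value: str) -> str:
    """Return the date in ISO 8601 (YYYY-MM-DD) form, or raise ValueError."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")


def normalize_record(record: dict) -> dict:
    """Bring every field of one record into the agreed standard format."""
    country = record["country"].strip().lower()
    return {
        # Collapse repeated whitespace and use title case for names.
        "name": " ".join(record["name"].split()).title(),
        # Unknown countries are kept (upper-cased) rather than dropped.
        "country": COUNTRY_CODES.get(country, country.upper()),
        # Keep digits only, so "(555) 010-2030" and "555.010.2030" match.
        "phone": re.sub(r"\D", "", record["phone"]),
        "joined": normalize_date(record["joined"]),
    }


raw = [
    {"name": "  jane   DOE ", "country": "USA",
     "phone": "(555) 010-2030", "joined": "03/14/2021"},
    {"name": "John Smith", "country": "united kingdom",
     "phone": "555.010.4050", "joined": "2021-07-01"},
]
clean = [normalize_record(r) for r in raw]
for row in clean:
    print(row)
```

With the sample input, the first record becomes `{'name': 'Jane Doe', 'country': 'US', 'phone': '5550102030', 'joined': '2021-03-14'}`. The sketch fails loudly on an unrecognized date instead of guessing, and the order of `DATE_FORMATS` decides how an ambiguous date such as `01/02/2021` is read.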

Apart from this basic standardization, data normalization experts have defined five “normal forms”. Each is a numbered set of rules that builds on the one before it, so higher-numbered forms impose stricter requirements on how data is organized.

For simplicity, this post covers the basics of the three most common forms: 1NF, 2NF and 3NF.

First normal form (1NF)

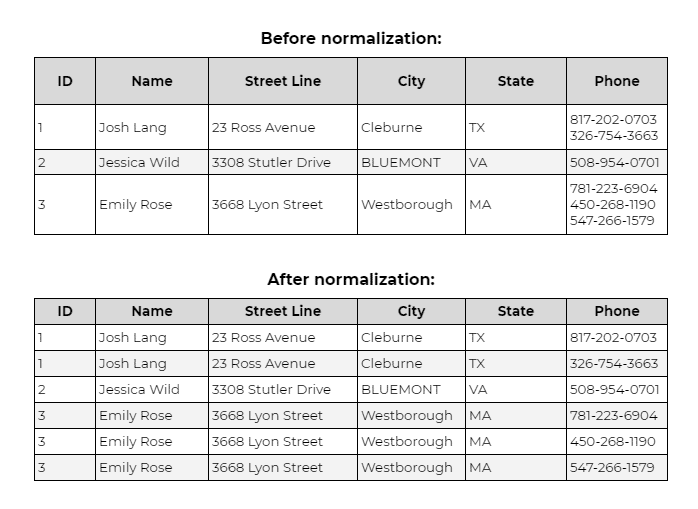

In the first normal form, each cell must hold a single value and each record must be unique. This ensures there are no duplicate entries.
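Here is a minimal Python sketch of these two 1NF requirements. It assumes a hypothetical customer table held as a list of dictionaries, in which the `phones` cell packs several comma-separated values and one record is repeated. The table and its field names are illustrative only.

```python
# A hypothetical unnormalized table: the "phones" cell holds several
# values, and the first record appears twice.
customers = [
    {"id": 1, "name": "Jane Doe", "phones": "555-0101, 555-0102"},
    {"id": 1, "name": "Jane Doe", "phones": "555-0101, 555-0102"},
    {"id": 2, "name": "John Smith", "phones": "555-0199"},
]


def to_first_normal_form(rows):
    """Split multi-valued cells into one row per value and drop duplicates."""
    seen = set()
    result = []
    for row in rows:
        for phone in row["phones"].split(","):
            atomic = (row["id"], row["name"], phone.strip())
            # Each (id, name, phone) combination is kept only once.
            if atomic not in seen:
                seen.add(atomic)
                result.append({"id": atomic[0], "name": atomic[1], "phone": atomic[2]})
    return result


for row in to_first_normal_form(customers):
    print(row)
```

The result has three rows, one per phone number, and the repeated record contributes nothing new. Every cell now holds a single value.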

Second normal form (2NF)

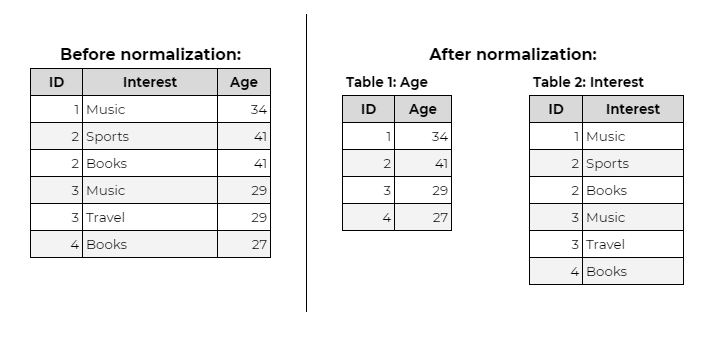

For data to satisfy the 2NF rule, it must first comply with all 1NF requirements. In addition, every non-key column must depend on the whole primary key (ID in the example above), not on just part of it. Data that does not meet this condition is placed in separate tables, and relationships among these entities are created via ‘foreign keys’.
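The 2NF split can be sketched in Python as follows. It assumes a hypothetical table that is already in 1NF and keyed by the pair (`id`, `phone`), in which `name` depends only on `id`, that is, on part of the key. The function moves `name` into a `customers` table with primary key `id` and keeps phone numbers in a `customer_phones` table whose `customer_id` column acts as a foreign key. All names here are illustrative.

```python
# Hypothetical 1NF table whose key is the pair (id, phone).
# "name" depends only on "id", i.e. on part of the key, which 2NF forbids.
rows = [
    {"id": 1, "name": "Jane Doe", "phone": "555-0101"},
    {"id": 1, "name": "Jane Doe", "phone": "555-0102"},
    {"id": 2, "name": "John Smith", "phone": "555-0199"},
]


def to_second_normal_form(rows):
    """Split the table so every non-key column depends on a whole key."""
    # customers: primary key "id"; "name" is stored once per customer.
    customers = {}
    # customer_phones: "customer_id" is a foreign key into customers.
    customer_phones = []
    for row in rows:
        customers[row["id"]] = {"id": row["id"], "name": row["name"]}
        customer_phones.append({"customer_id": row["id"], "phone": row["phone"]})
    return list(customers.values()), customer_phones


customers, customer_phones = to_second_normal_form(rows)
print(customers)
print(customer_phones)

# Following the foreign key back reconstructs the original rows,
# so no information was lost by the split.
names = {c["id"]: c["name"] for c in customers}
rejoined = [
    {"id": p["customer_id"], "name": names[p["customer_id"]], "phone": p["phone"]}
    for p in customer_phones
]
assert rejoined == rows
```

Jane Doe's name is now stored once instead of once per phone number, and the foreign key lets the original view be rebuilt whenever it is needed.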

Third normal form (3NF)

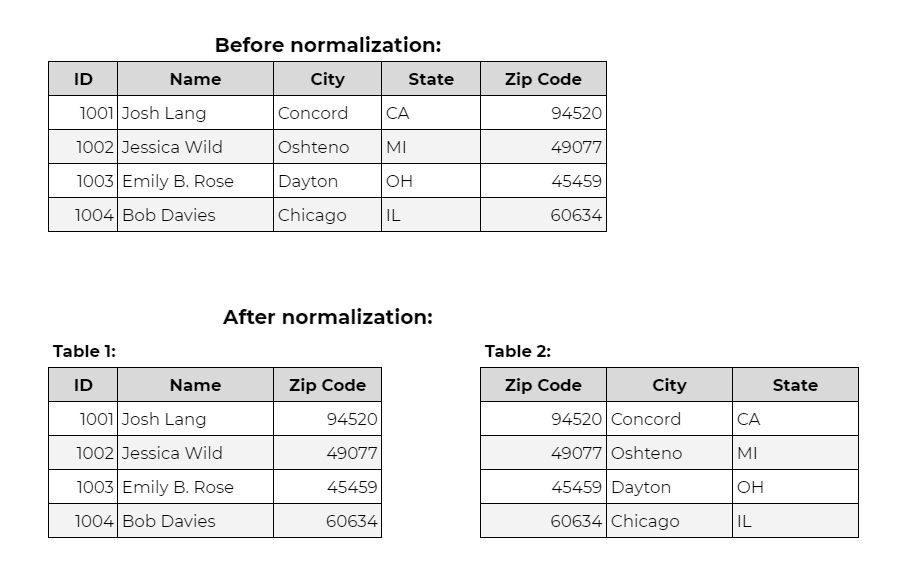

Under the 3NF rule, the data must first satisfy all 2NF conditions. In addition, every non-key column must depend only on the primary key (ID), not on another non-key column. If a column depends on something other than the primary key, that column and its associated data are moved to a new table.
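A minimal Python sketch of the 3NF step, assuming a hypothetical `leads` table keyed by `id` in which `industry` depends on `company`, a non-key column, rather than on the lead itself. The sketch moves that dependency into a separate company table. The table and column names are invented for illustration.

```python
# Hypothetical 2NF table keyed by "id". "industry" describes the company,
# not the lead, so it depends on "company" (a non-key column) and is
# repeated for every lead at the same company.
leads = [
    {"id": 1, "name": "Jane Doe", "company": "Acme", "industry": "Retail"},
    {"id": 2, "name": "John Smith", "company": "Acme", "industry": "Retail"},
    {"id": 3, "name": "Ana Lima", "company": "Globex", "industry": "Energy"},
]


def to_third_normal_form(rows):
    """Move columns that depend on a non-key column into their own table."""
    companies = {}
    for row in rows:
        known = companies.setdefault(row["company"], row["industry"])
        # Conflicting values for the same company signal inconsistent data.
        if known != row["industry"]:
            raise ValueError(f"conflicting industry for {row['company']!r}")
    lead_table = [
        {"id": r["id"], "name": r["name"], "company": r["company"]} for r in rows
    ]
    company_table = [{"company": c, "industry": i} for c, i in companies.items()]
    return lead_table, company_table


lead_table, company_table = to_third_normal_form(leads)
print(lead_table)
print(company_table)
```

After the split, each company's industry is stored once, so changing it touches a single row rather than every lead at that company.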


Advantages of data normalization

In addition to the merits mentioned above, the following are some of the other major benefits of normalizing any data:

Improved consistency

With data normalization, each piece of information is stored in a single place instead of being repeated across records. When a value changes, it only needs to be updated once, which reduces the possibility of inconsistent data. This in turn improves the quality of your dataset and strengthens your foundations, so teams can avoid unnecessary risks during decision-making.

More efficient data analysis

In databases crammed with all sorts of information, normalization eliminates duplicates and organizes unstructured datasets. Removing this clutter frees up storage space and can improve processing performance.

As a result, key systems can load and run faster, allowing analysts to process more data and gain more valuable insights.
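To illustrate how normalization helps remove duplicates, here is a minimal Python sketch that compares records on normalized keys rather than raw strings. The `company` and `website` fields, the sample rows and the normalization rules (lower-casing, collapsing whitespace, stripping the URL scheme, a leading `www.` and a trailing slash) are assumptions chosen for illustration.

```python
import re

# Hypothetical scraped records: rows 1 and 2 describe the same company but
# differ in case, spacing and URL formatting, so exact matching misses them.
records = [
    {"company": "Acme Corp", "website": "https://www.acme.com/"},
    {"company": "  ACME corp", "website": "http://acme.com"},
    {"company": "Globex", "website": "https://globex.com"},
]


def dedup_key(record):
    """Build a comparison key from normalized versions of the fields."""
    name = " ".join(record["company"].lower().split())
    # Drop the scheme, a leading "www." and any trailing slash.
    site = re.sub(r"^https?://(www\.)?", "", record["website"].lower()).rstrip("/")
    return (name, site)


def deduplicate(rows):
    """Keep the first record seen for each normalized key."""
    seen = set()
    unique = []
    for row in rows:
        key = dedup_key(row)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


print(len(records), "->", len(deduplicate(records)))
```

With the sample data it prints `3 -> 2`: the first two rows collapse into one because they match after normalization, even though an exact string comparison would treat them as different.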

Better and faster data-driven decisions

Thanks to normalization, teams and analytical systems can process more data more efficiently. With already-structured data available, they spend little time modifying and organizing it. Such datasets are easy to analyze, so you can reach meaningful conclusions sooner, saving valuable time and resources.

Improved lead segmentation

Lead segmentation is one of the most effective ways to grow your business, and normalization makes it much simpler. When fields such as industry and job title are recorded consistently, it is easy to segment your leads by those or any other attribute. You can then create targeted campaigns and tailor experiences to the needs of each segment.
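A minimal Python sketch of segmentation on a normalized field. It assumes a hypothetical alias table that maps raw job-title spellings to canonical titles; in practice such a mapping would be curated for your own data. Titles not in the table are passed through unchanged.

```python
from collections import defaultdict

# Hypothetical mapping from raw job-title spellings to canonical titles.
TITLE_ALIASES = {
    "vp marketing": "VP of Marketing",
    "vp of marketing": "VP of Marketing",
    "vice president, marketing": "VP of Marketing",
    "cto": "CTO",
    "chief technology officer": "CTO",
}

leads = [
    {"email": "a@example.com", "title": "VP Marketing"},
    {"email": "b@example.com", "title": "Vice President, Marketing"},
    {"email": "c@example.com", "title": "Chief Technology Officer"},
    {"email": "d@example.com", "title": "cto"},
    {"email": "e@example.com", "title": "Data Analyst"},
]


def canonical_title(raw):
    """Map a raw title to its canonical form; unknown titles pass through."""
    key = " ".join(raw.lower().split())
    return TITLE_ALIASES.get(key, raw.strip())


def segment_by_title(rows):
    """Group lead emails by canonical job title."""
    segments = defaultdict(list)
    for row in rows:
        segments[canonical_title(row["title"])].append(row["email"])
    return dict(segments)


for title, emails in segment_by_title(leads).items():
    print(title, emails)
```

With the sample leads, the two marketing titles land in one `VP of Marketing` segment and the two CTO variants in one `CTO` segment, while `Data Analyst` forms its own group.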

With data becoming increasingly valuable to companies and brands worldwide, it is imperative to prioritize its quality to reap lasting benefits. Normalization ensures consistency across all of your datasets, so everyone in your organization works from the same information and can move seamlessly toward common goals.

Hence, data normalization should not merely be one of the options or tools at your disposal, but one of the first processes that you employ to take your company to the next level.

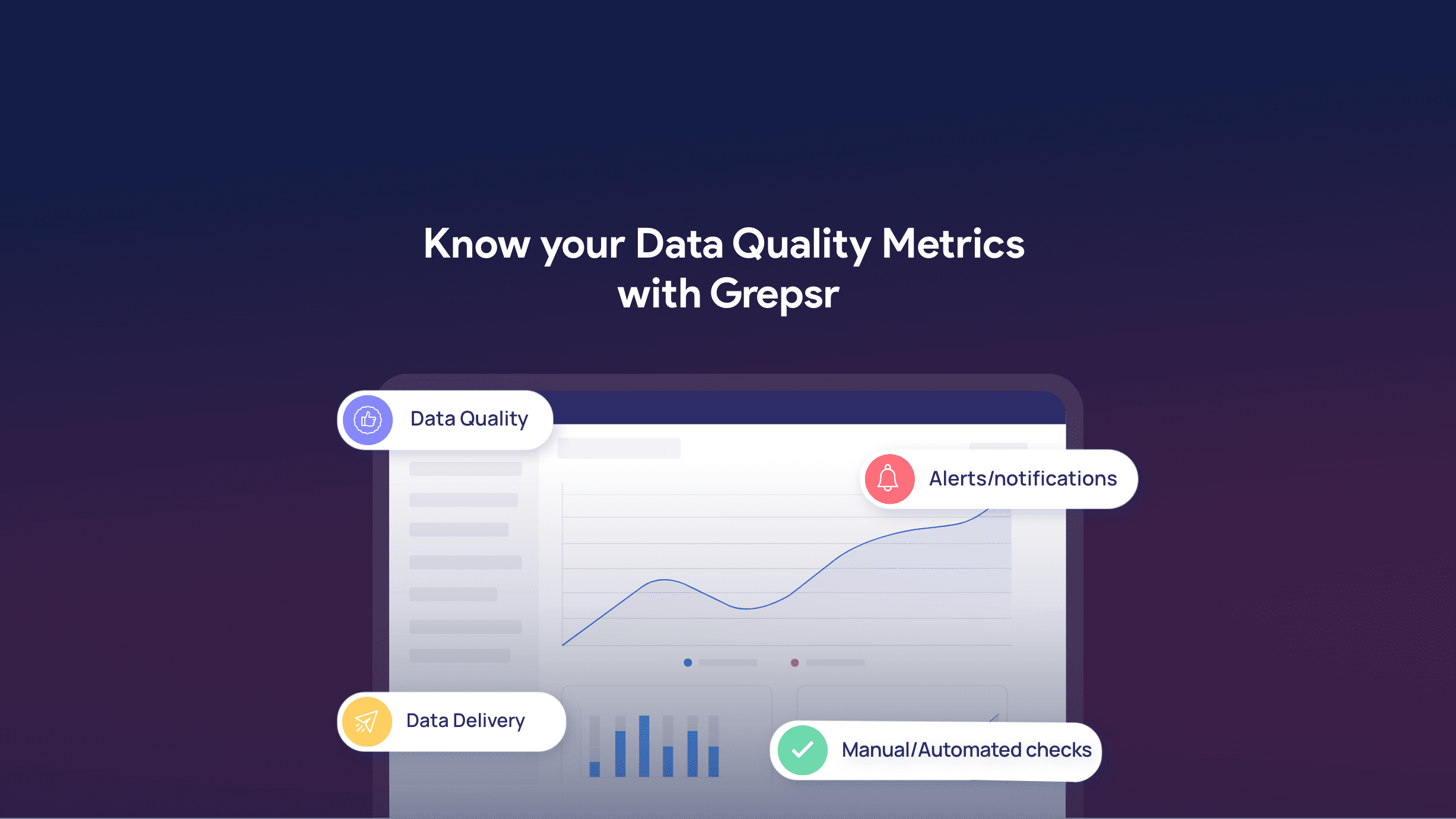

About Grepsr

At Grepsr, we always strive to provide the highest-quality data to our customers. The basic aspects of data normalization, such as deduplication and standardization, are always the first parts of our QA process. The datasets we deliver are therefore reliable and actionable straight out of the box, so you can quickly gather insights and chart the path to continued success.