Choosing the right web scraping service can make or break your data strategy. The right partner ensures you get accurate, compliant, and ready-to-use data without delays or hidden costs. In this guide, we’ll walk you through the key factors to consider and show how Grepsr delivers on all of them.

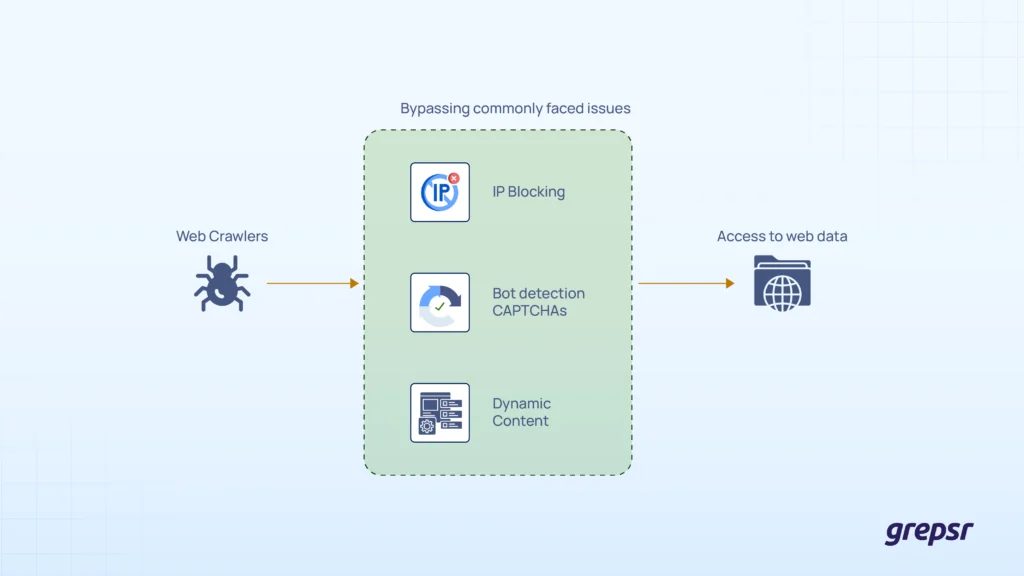

As data becomes the fuel driving smarter business decisions, web scraping is the supply chain that keeps it flowing. But doing it in-house is often the hardest route; you have to deal with roadblocks like CAPTCHA solving, authentication barriers, IP blocks, and ever-changing site structures.

To bypass these challenges, a growing number of web scraping services have entered the market. However, sifting through them to find the right fit isn’t easy. It requires asking the right questions and knowing which factors truly matter.

Understanding your data needs first

Before you start looking for external web scraping services, figure out what your data needs are.

What type of data you need depends entirely on your use case or end goal. For example, pricing intelligence requires real-time product listings, prices, and availability data from competitor websites or marketplaces.

If you’re focused on lead generation, you’ll likely need contact information and company profile data scraped from directories, social platforms, or business sites.

Market research use cases, on the other hand, depend on broader datasets like customer reviews, social media sentiment, product metadata, or category trends to help you analyze consumer behavior and industry shifts.

Each use case requires a different type and volume of data, which may be available in a structured or unstructured format. The data is pulled at different frequencies (daily, weekly, monthly, etc.), and each site can present a different level of complexity for data extraction.

Key factors to consider

Let’s look at the most important factors to analyze when selecting a web scraping service.

Tech infrastructure capabilities

The most important capability your data provider must have is handling projects at scale, from thousands to millions of records, without crawler breakdowns. That requires a robust server infrastructure that can balance load and scale with your data needs.

When extracting data from different websites, it is common to run into bot detection measures like CAPTCHAs, IP bans, and session-based blocks. The provider you choose must have mechanisms in place to get past these without compromising the consistency and reliability of the data.

The extracted data is also often raw and messy. Look for providers that clean, normalize and structure the data for you in ready-for-analysis formats.
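As a rough illustration of what "clean, normalize and structure" can mean in practice, here is a minimal Python sketch. The field names (`name`, `price`, `availability`) and the sample record are hypothetical, and the price parsing assumes US-style formatting (comma as thousands separator, dot as decimal point). A real pipeline would need site-specific rules.

```python
import re
from decimal import Decimal


def normalize_record(raw):
    """Turn one raw scraped record into a tidy, typed record.

    Assumes hypothetical fields: name, price, availability.
    """
    # Collapse runs of whitespace (including newlines) into single spaces.
    name = " ".join(raw["name"].split())

    # Keep only digits and the decimal point, e.g. "$1,299.50 " -> "1299.50".
    price_text = re.sub(r"[^\d.]", "", raw["price"])
    price = Decimal(price_text) if price_text else None

    # Map free-text availability to a boolean (assumed label set).
    in_stock = raw["availability"].strip().lower() in {"in stock", "available"}

    return {"name": name, "price": price, "in_stock": in_stock}


raw = {"name": "  Wireless   Mouse\n", "price": "$1,299.50 ", "availability": " In Stock "}
clean = normalize_record(raw)
print(clean)
```

Using `Decimal` rather than `float` for prices is a design choice that avoids rounding surprises when the values are later summed or compared.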

Compliance and ethics

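Scraping publicly available web data is generally legal in many jurisdictions, but ignoring a site’s terms and conditions against scraping can expose you to legal risk. Many sites also publish a robots.txt file that tells crawlers which parts of the site they may or may not access.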

So, ensure that you pick an ethical data provider who respects the site-specific terms for data privacy and isn’t putting your business at legal risk.

More importantly, your provider must be aware of data protection regulations like the General Data Protection Regulation (GDPR) in the EU and the California Consumer Privacy Act (CCPA) and avoid scraping copyrighted or personally identifiable data.
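To make the robots.txt check mentioned above concrete, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The robots.txt content, the `example-bot` user agent, and the example.com URLs are all made up for illustration, and the file is inlined so the example runs without network access. Passing this check does not by itself settle questions about a site's terms of service or privacy law.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Allow: /products/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


allowed = is_allowed(ROBOTS_TXT, "example-bot", "https://example.com/products/123")
blocked = is_allowed(ROBOTS_TXT, "example-bot", "https://example.com/checkout/cart")
print(allowed, blocked)  # prints: True False
```

When vetting a provider, you can ask whether their crawlers run a check like this before each crawl and how they handle sites whose rules change.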

Customization and flexibility

No two websites are built the same, so your web data provider has to offer custom scraping tailored to each target source.

Websites keep updating their pages with new information, such as seasonal pricing changes or promotional offers. Your external data partner must be able to detect these changes and update its crawlers so that you get the most recent data without disruptions.

If your needs are industry-specific (e.g., car rentals or real estate), you can choose a provider that focuses on extracting data from the target sites of that particular niche. This way, you get more credible and reliable data that meets your business needs.

Data quality and accuracy

Quality and accuracy matter most when choosing a data provider. They are non-negotiable because they directly affect the insights you derive and the business decisions you base on them.

One must ensure that the external data provider delivers high-quality datasets with no duplicates, minimal to no missing values (unless the target website has missing values), and validated data fields.

The provider must also deploy robust QA mechanisms along with manual monitoring for data freshness and consistency. So, remember to ask for their data quality benchmarks before taking the partnership forward.
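When you receive a sample dataset, a few of these benchmarks are easy to check yourself. The sketch below counts duplicate rows and computes a fill rate (share of non-empty values) per field. The sample records, the field names, and the choice of `sku` as the uniqueness key are assumptions for illustration only.

```python
from collections import Counter


def quality_report(records, required_fields, key_fields):
    """Summarize row count, duplicate rows, and per-field fill rates."""
    total = len(records)

    # Rows sharing the same key as an earlier row count as duplicates.
    keys = Counter(tuple(r.get(f) for f in key_fields) for r in records)
    duplicates = sum(n - 1 for n in keys.values())

    # Fill rate = share of rows with a non-empty value for the field.
    fill_rates = {}
    for field in required_fields:
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        fill_rates[field] = filled / total if total else 0.0

    return {"rows": total, "duplicates": duplicates, "fill_rates": fill_rates}


sample = [
    {"sku": "A1", "price": "19.99", "stock": "12"},
    {"sku": "A1", "price": "19.99", "stock": "12"},  # duplicate of the first row
    {"sku": "B2", "price": "", "stock": "0"},        # missing price
    {"sku": "C3", "price": "5.00", "stock": None},   # missing stock
]
report = quality_report(sample, ["sku", "price", "stock"], ["sku"])
print(report)
```

A low fill rate is not always the provider's fault, since the target site itself may omit values, so compare the report against a manual spot check of the source pages.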

Pricing and transparency

One of the most common pain points for businesses exploring web scraping services is the lack of clarity around what you’re actually paying for. Pricing that sounds simple on the surface, like a flat monthly rate of $500, often hides critical details:

- Are setup and maintenance included?

- Is there a cap on the number of websites or records?

- Does the quoted price include data delivery or just extraction?

These hidden costs can lead to unexpected charges and complicate long-term budgeting, especially as your data needs grow.

To avoid surprises, always ask for a detailed breakdown of the billing model. Understand whether you’re charged per record, per page, by volume, or via a custom pricing plan for your specific use case.
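Once you have a breakdown, comparing quotes is simple arithmetic. The sketch below models two hypothetical quotes: a flat plan with a record cap, overage, maintenance, and setup fees, and a pure per-record plan. Apart from the $500 flat rate used as an example above, every figure and field name is invented for illustration and does not reflect any provider's actual pricing.

```python
from dataclasses import dataclass


@dataclass
class Quote:
    """A hypothetical provider quote (all amounts in dollars)."""
    flat_monthly: float = 0.0         # fixed subscription fee
    included_records: int = 0         # records covered by the flat fee
    per_thousand_records: float = 0.0 # fee per 1,000 records beyond the cap
    monthly_maintenance: float = 0.0  # recurring crawler maintenance
    setup_fee: float = 0.0            # one-time setup


def total_cost(quote, records_per_month, months):
    """Total cost of a quote over the given number of months."""
    billable = max(0, records_per_month - quote.included_records)
    usage = billable / 1000 * quote.per_thousand_records
    monthly = quote.flat_monthly + quote.monthly_maintenance + usage
    return quote.setup_fee + monthly * months


flat_plan = Quote(flat_monthly=500, included_records=100_000,
                  per_thousand_records=2, monthly_maintenance=50, setup_fee=300)
usage_plan = Quote(per_thousand_records=3)

flat_total = total_cost(flat_plan, records_per_month=250_000, months=12)
usage_total = total_cost(usage_plan, records_per_month=250_000, months=12)
print(flat_total, usage_total)  # prints: 10500.0 9000.0
```

In this made-up scenario, the plan with the lower headline price ends up costing more over a year once overage, maintenance, and setup are counted, which is why the full breakdown matters.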

Before committing, request a proof of concept or trial run. This gives you a chance to validate data quality, accuracy, and turnaround time before signing a full contract.

With the right partner, you won’t have to deal with any hidden costs, just clean data, delivered as promised.

Turnaround time and delivery

When evaluating turnaround time and delivery, look for a provider that offers flexible scheduling options, whether you need data on demand, daily, or in real time. For use cases like pricing alerts or inventory tracking, even slight delays can make data stale.

Additionally, consider how the data will be delivered. The best providers support multiple delivery methods such as APIs, Amazon S3, FTP, or email, making it easier to plug the data directly into your systems and workflows.

Customer support and communication 24/7

24/7 customer support is critical, especially when your data workflows are time-sensitive or integrated directly into real-time analytics systems.

Your use case or data requirements may evolve midway through the project, such as needing new fields, changing target sources, or adjusting delivery frequency. Even then, the support team should be equipped to understand those changes and relay them effectively to the technical team.

So, look for providers that offer dedicated account managers or clear communication channels, such as Slack, ticketing systems, or shared dashboards. The ability to get quick responses and status updates can make or break the success of your data operations.

How Grepsr checks all the boxes

☑️ Tech Infrastructure Capabilities = Grepsr’s Scalable Data Platform

Grepsr’s technical infrastructure, the data platform, is built to scale. It can process millions of records and run thousands of crawlers across hundreds of sources without breaking.

It supports dynamic websites with JavaScript content and overcomes anti-bot challenges like IP blocking, session limits, and CAPTCHA. We also auto-clean, normalize, and structure your data so it’s ready to plug into your systems.

☑️ Compliance & Ethics = Responsible Data Practices

Grepsr follows ethical web scraping principles, respecting robots.txt, site-specific terms, and global privacy laws. We are fully compliant with GDPR and CCPA, and avoid scraping copyrighted or sensitive data unless permitted.

☑️ Customization & Flexibility = Tailored Workflows

Every crawler is customized with site-specific logic, edge-case handling, and automatic updates when site structures change. We also support industry-specific datasets across verticals like e-commerce, healthcare, real estate, automotive, etc.

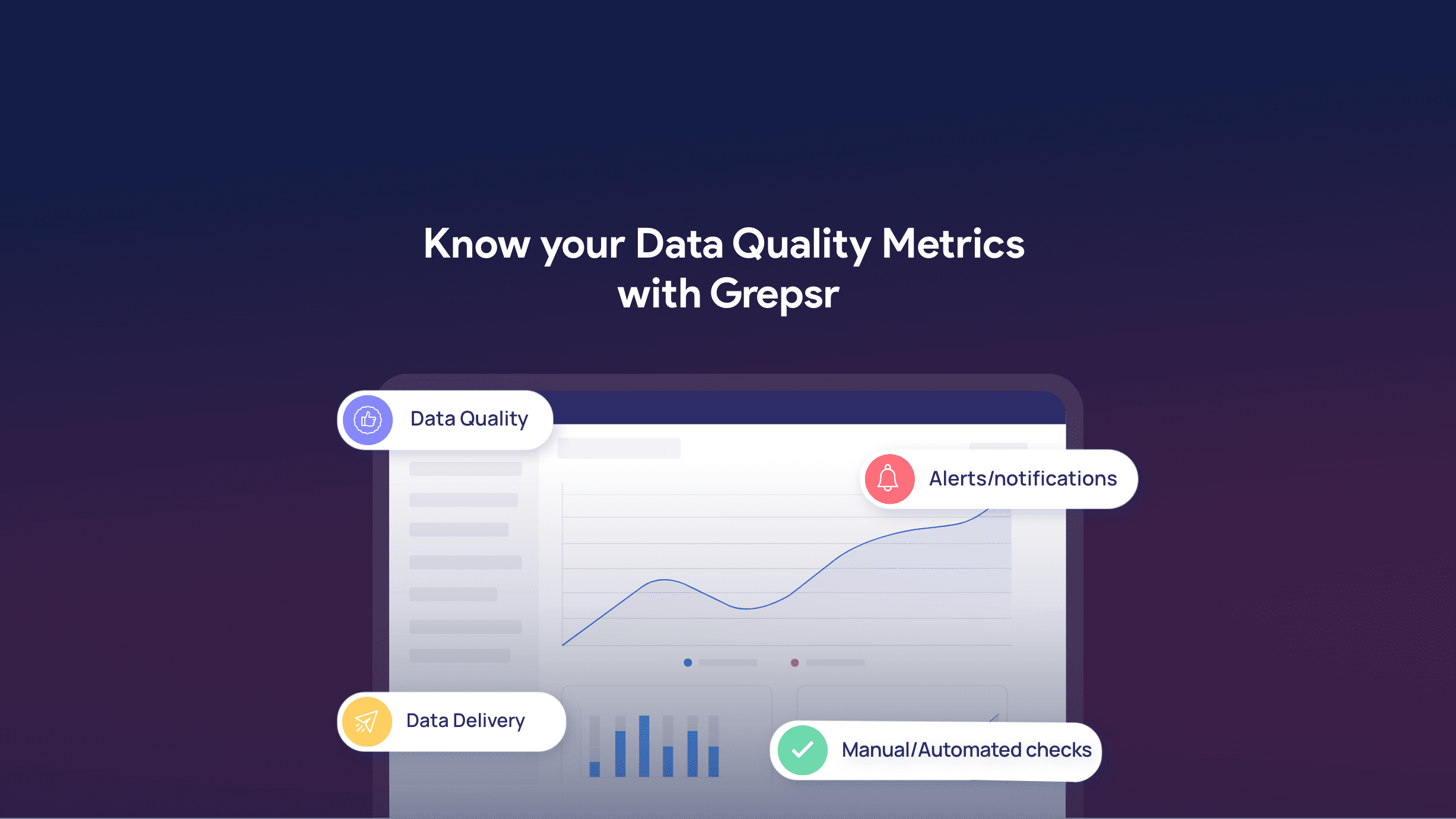

☑️ Data Quality & Accuracy = Built-in QA Mechanism and Data Quality Dashboard

Grepsr guarantees clean, deduplicated, and validated datasets. We automate QA checks in our platform for each run, and our expert QAs also manually monitor fill rates and duplication and flag anomalies in real time. Quality benchmarks can be assessed clearly through our data profile dashboard.

☑️ Pricing = Custom and Transparent Pricing Model

Grepsr offers fully transparent, usage-based pricing that includes cost per record/row of dataset, setup, maintenance, and delivery. No hidden costs! You know exactly what you’re paying for. We even offer a free POC or trial run so you can assess quality before making a long-term commitment.

☑️ Turnaround Time & Delivery = Flexible Scheduling Feature

Need daily, weekly, real-time, or on-demand scraping? Grepsr supports all of them. Data can be delivered via API, S3, FTP, Google Drive, or email in formats such as XML, CSV, or JSON. You can schedule delivery timelines and frequency as per project requirements.

☑️ Customer Support & Communication = 24/7 across the globe

Grepsr offers 24/7 support, with dedicated success managers for each client and quick turnaround on change requests. You can communicate through Slack, email, tickets, or the collaboration tab of our platform to track runs and monitor data delivery and QA status.

Choosing the right web scraping service makes all the difference.

With Grepsr, you get more than just a data provider; you get a partner built for scale, speed, and precision. From compliance to customization, we take care of everything so you can focus on turning insights into action.

Consult with Grepsr to power your next data project. Start with a free proof of concept.