Web data is essential for AI, but collecting it at scale is complex. Grepsr delivers clean, compliant data to power better models.

AI breakthroughs were once thought to depend mainly on deep insights into human cognition and neural networks.

Whilst these factors are still important, data and compute resources have more recently come to the forefront.

In 2019, GPT-2 was reportedly trained on about 4 billion tokens, or roughly 3 billion words. By 2023, GPT-4 was reportedly trained on about 13 trillion tokens, or nearly 10 trillion words.

Where did all this data come from? The web.

By one widely cited estimate, the internet generates about 402.75 million terabytes of new data every single day.

Web-sourced data would seem to be an ideal source of partially structured data for training AI models.

However, accessing web data isn’t without challenges.

Many websites use anti-bot technologies such as IP blocking, rate limiting, and CAPTCHAs, making large-scale extraction difficult and time-consuming.

There is also a grey area: even when data is publicly available, scraping it may still carry compliance risks.

At Grepsr, we practice responsible web scraping, ensuring that we comply with legal standards and ethical considerations.

Our ISO 27001:2013 certification means we meet high standards for data security and governance.

We also take steps to prevent overburdening websites by employing automated throttling and load balancing.
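As a rough illustration of what automated throttling can look like, here is a minimal sketch that enforces a minimum delay between requests to the same host. The class name `DomainThrottle`, the one-second default, and the injectable `clock` and `sleep` parameters are assumptions made for this example; they are not a description of Grepsr's actual implementation.

```python
import time
from urllib.parse import urlparse


class DomainThrottle:
    """Enforce a minimum delay between requests to the same host.

    Hypothetical helper for illustration only.
    """

    def __init__(self, min_delay_seconds=1.0, clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay_seconds
        self._clock = clock          # injectable so the logic can be tested without waiting
        self._sleep = sleep
        self._last_request = {}      # host -> time of the most recent request

    def wait(self, url):
        """Pause until it is polite to request `url`; return the seconds waited."""
        host = urlparse(url).netloc
        last = self._last_request.get(host)
        waited = 0.0
        if last is not None:
            remaining = self.min_delay - (self._clock() - last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        # Record the time of this request for the next call.
        self._last_request[host] = self._clock()
        return waited
```

A crawler would call `throttle.wait(url)` immediately before each request. Hosts are tracked separately, so a slow site does not hold up requests to other sites.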

Most importantly, we never scrape personally identifiable information, ensuring we stay on the right side of privacy laws.

AI teams should focus on model development, not on bypassing anti-bot measures or dealing with compliance risks.

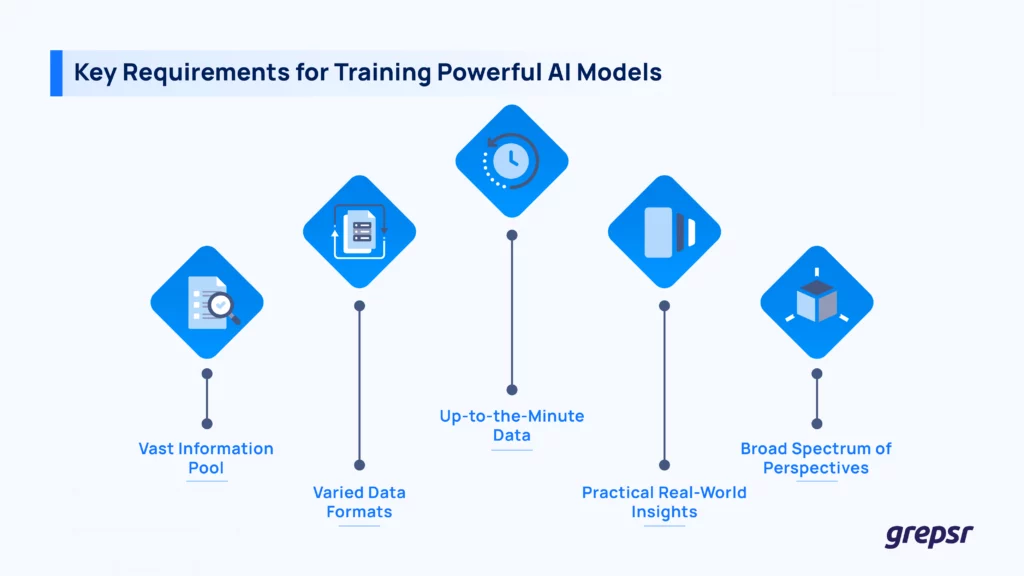

Immense Information

The web is a mix of diverse data, ranging from news articles on the BBC to discussions on Reddit.

Training AI models on this wide range of information helps them generate more accurate and relevant responses.

Diverse Data Types

The web also contains various content types, including text, images, videos, and conversational exchanges.

This diversity helps AI models learn a broad range of linguistic styles, cultural contexts, and situational nuances, allowing them to generate more natural and contextually aware responses.

Real-Time Data

Websites are constantly refreshed to remain competitive and keep their audience engaged.

By training AI models with this up-to-date content, the models stay relevant and responsive to the latest trends, news, and information, improving their accuracy and ability to address current issues.

Real-World Context

Web data reflects real-world scenarios, which makes it essential if AI models are to produce outputs grounded in everyday contexts.

Training with web data helps models understand how people use language in practical, real-world situations, ensuring the AI’s responses are not just theoretically sound but also practically applicable.

Diversity of Perspectives

The internet provides access to a wide array of voices, opinions, and experiences, helping to counter the risk of biased or narrow AI outputs.

By training AI on data drawn from multiple regions, cultures, and demographics, the model can provide more inclusive, balanced, and fair responses, reducing the risk of perpetuating stereotypes or reinforcing existing biases.

Why AI Models Need Accurate Web Data

Recently, Elon Musk claimed that AI has already consumed all human-produced data for training and must now rely on synthetic data.

The integrity of an AI model depends entirely on the data it’s fed. Labeled datasets are used to train the model, and task-specific datasets fine-tune it.

However, if the content of the training datasets is biased or lacking in diversity, the AI model will inevitably produce biased or inaccurate outputs.

The same is true if the datasets are inaccurate.

It’s the same old rule of thumb—Garbage In, Garbage Out.

So extracting data from the web is only part of the solution; the resulting datasets must also be complete and accurate.

Grepsr not only collects data at scale but also performs data preparation tasks so that the data is ready for the next stage.

Collecting Web Data is Challenging

1. Anti-Scraping Measures Make Data Extraction Difficult

Many websites actively block bots using CAPTCHAs, IP bans, and bot-detection systems.

These defenses are becoming more sophisticated, making it harder for in-house teams to maintain uninterrupted data collection.

Grepsr uses a combination of proxy management, browser-based scraping, and automated CAPTCHA handling to bypass restrictions and ensure continuous data access.

2. Dynamic Websites Require Advanced Scraping Techniques

Modern websites use JavaScript rendering, infinite scrolling, and AJAX-based content loading, which standard scrapers often fail to handle.

Without proper techniques, large portions of data remain inaccessible.

Grepsr uses headless browsers and automated interaction techniques to extract data from dynamic websites, ensuring all relevant information is captured.

3. Scaling Scraping Operations is Resource-Intensive

Scraping a few pages is simple, but regularly extracting millions of data points requires robust infrastructure.

Proxy management, crawler maintenance, and server costs can quickly add up.

Grepsr’s cloud-based infrastructure allows for scalable data extraction without requiring additional in-house resources, making large-scale web data collection more efficient.

4. Data Quality Can Be Inconsistent

Raw scraped data often contains duplicates, missing fields, and inconsistencies that can affect AI models and business insights.

Cleaning and structuring large datasets manually is time-consuming.

Grepsr applies automated and manual quality checks to filter, clean, and structure data before delivery, ensuring accuracy and consistency.
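To make the automated part of such checks concrete, here is a minimal sketch that normalises whitespace, rejects records with missing required fields, and drops duplicates. The function name `clean_records`, its parameters, and the sample field names are hypothetical and chosen for illustration; the article does not describe Grepsr's pipeline at this level of detail.

```python
def clean_records(records, required_fields, key_fields):
    """Return (cleaned, rejected, duplicate_count) for a list of scraped dicts.

    Hypothetical helper for illustration only.
    """
    seen = set()
    cleaned, rejected = [], []
    duplicate_count = 0
    for record in records:
        # Strip and collapse whitespace in every string value.
        row = {
            key: " ".join(value.split()) if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Reject rows where a required field is absent or empty.
        if any(row.get(field) in (None, "") for field in required_fields):
            rejected.append(record)
            continue
        # Treat rows with the same key fields (case-insensitive) as duplicates.
        dedup_key = tuple(str(row.get(field)).lower() for field in key_fields)
        if dedup_key in seen:
            duplicate_count += 1
            continue
        seen.add(dedup_key)
        cleaned.append(row)
    return cleaned, rejected, duplicate_count


sample = [
    {"url": "https://example.com/a", "title": "  Widget   A ", "price": "9.99"},
    {"url": "https://example.com/a", "title": "Widget A", "price": "9.99"},
    {"url": "https://example.com/b", "title": "", "price": "4.50"},
    {"url": "https://example.com/c", "title": "Widget C"},
]
cleaned, rejected, duplicate_count = clean_records(
    sample, required_fields=["url", "title", "price"], key_fields=["url"]
)
```

Keeping the rejected rows, rather than silently discarding them, leaves room for the manual review step mentioned above.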

Our automated pipelines extract, clean, and enrich large-scale datasets, delivering structured, AI-ready data—so your team can focus on model development instead of data wrangling.

Plus, our data management platform gives you full visibility into the crawler lifecycle, data quality, and key performance metrics, ensuring your datasets remain accurate, compliant, and scalable.

5. Legal and Compliance Issues

Data privacy laws like GDPR and CCPA, along with website terms of service, make web scraping legally complex.

Without proper safeguards, businesses may face compliance risks.

Grepsr follows ethical scraping practices and ensures compliance with legal requirements, reducing the risk of regulatory issues.
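One common safeguard, shown here as a minimal sketch, is to honour a site's robots.txt rules before fetching a page. It uses Python's standard `urllib.robotparser`. The helper name `build_robots_checker`, the user agent string, and the sample rules are assumptions for illustration. Respecting robots.txt is a courtesy convention and does not by itself establish compliance with GDPR, CCPA, or a site's terms of service.

```python
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt, user_agent):
    """Return a function that reports whether `user_agent` may fetch a URL.

    Hypothetical helper for illustration only. `robots_txt` is the text of
    the site's robots.txt file, fetched separately.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    def is_allowed(url):
        return parser.can_fetch(user_agent, url)

    return is_allowed


# Sample rules, invented for this example.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

is_allowed = build_robots_checker(SAMPLE_ROBOTS_TXT, "example-crawler")
```

With these sample rules, `is_allowed("https://example.com/private/report")` returns `False`, while a URL outside `/private/` is permitted.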

Reliable Web Data is the Foundation of Smarter AI

AI models are only as good as the data they train on—but acquiring that data at scale is one of the biggest hurdles AI companies face.

With web data you can bridge the gap between AI’s potential and the real-world knowledge it needs to learn.

Without clean, structured, and well-labeled datasets, AI systems risk inefficiencies, bias, and costly retraining cycles.

The need for cost-effective, high-quality, scalable, and ethically sourced web data has never been greater.

Grepsr stands ready to assist in your next phase of data growth.