In a world drowning in information, making sense of raw data from the internet is like finding a needle in a haystack. The silver lining is that ETL and web scraping, working together, can turn the chaos of unlimited, unstructured data into something clear and usable.

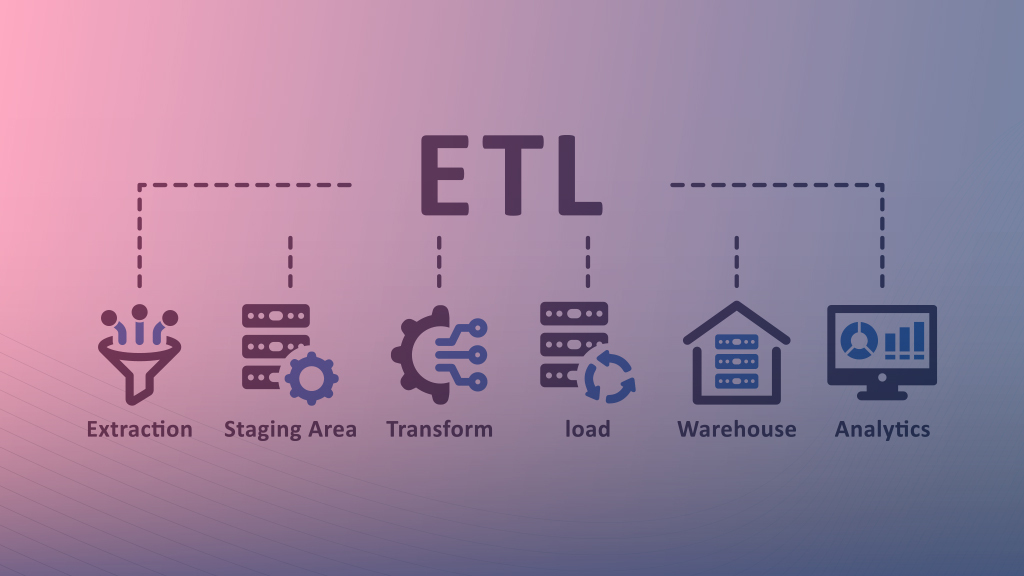

ETL is short for extract, transform, and load, a data integration process. As the name suggests, data is extracted from multiple sources, transformed, and loaded into a target system.

Now, let’s delve into its synergy with web scraping.

Web scraping is the process of collecting data from websites and web pages so that it can be analyzed and used to support data-driven initiatives.

The web scraping process revolves around extracting data from websites, transforming it, and loading it into readable files.

Thus, ETL is an essential part of web scraping: it is how data from the vast expanse of the web gets gathered and organized.

Understanding ETL in Simple Terms

To make extraction, transformation, and loading more concrete, here is a simple toy box analogy.

Extract: As simple as it sounds, extracting means grabbing information. Suppose you have a huge box full of toy cars. Picking out specific cars from that box is extracting.

Transform: Transforming means changing or reorganizing the toys from the way they originally were. Let’s say you want all of your toy cars arranged by color, so the red cars are together, the blue ones are together, and so on.

Load: Finally, after sorting the toy cars by color, you place each group in its own bin so that everything has a designated place.

The same three steps apply to web scraping.

Extract: Just as you picked specific cars out of the box, extracting in web scraping means collecting specific data from a website, for example all the perfume product titles on an Amazon search results page.

Transform: After collecting all the titles, you want to organize them alphabetically. Transforming in web scraping means changing the format or structure of the data; in this case, the collected titles are sorted into alphabetical order.

Load: Finally, the transformed data has to be kept somewhere you can find it when needed. Loading in web scraping means storing the organized data in a place where it can be used later, for example for analysis.

Thus, ETL in web scraping means retrieving data from a web page, putting it into the required format, and storing it so that you can access it easily and use it for decision-making.
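To make the three steps tangible, here is a minimal Python sketch that uses only the standard library. It is an illustration under stated assumptions, not a production scraper: `SAMPLE_HTML` is a made-up snippet standing in for a downloaded page, and the `class="title"` markup and the names `TitleParser`, `extract`, `transform`, and `load` are hypothetical. A real search results page will have different markup.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical snippet standing in for a downloaded search results page.
SAMPLE_HTML = """
<ul>
  <li class="title">Rose Eau de Parfum</li>
  <li class="title">Amber Noir</li>
  <li class="title">citrus Bloom</li>
</ul>
"""


class TitleParser(HTMLParser):
    """Collects the text of every <li class="title"> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.titles = []

    def handle_starttag(self, tag, attrs):
        if tag == "li" and ("class", "title") in attrs:
            self.in_title = True

    def handle_data(self, data):
        if self.in_title and data.strip():
            self.titles.append(data.strip())

    def handle_endtag(self, tag):
        if tag == "li":
            self.in_title = False


def extract(html):
    """Extract: pull the product titles out of the HTML."""
    parser = TitleParser()
    parser.feed(html)
    return parser.titles


def transform(titles):
    """Transform: sort the titles alphabetically, ignoring case."""
    return sorted(titles, key=str.lower)


def load(titles, out):
    """Load: write the titles to a CSV file-like object."""
    writer = csv.writer(out)
    writer.writerow(["title"])
    for title in titles:
        writer.writerow([title])


buffer = io.StringIO()  # swap in open("titles.csv", "w", newline="") to write a file
load(transform(extract(SAMPLE_HTML)), buffer)
csv_text = buffer.getvalue()
```

Keeping each step in its own function mirrors the toy box analogy: you can change how titles are sorted, or where they are stored, without touching the extraction code.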

Web Scraping: The Digital Extraction

Web scraping is a solution that automatically navigates websites and extracts relevant data at scale. The data is stored in your preferred file format and used in applications and to make informed decisions.

The process starts with an HTTP request to the target website; the response contains the HTML content of the requested web page.

The HTML content is then parsed, meaning it is broken into a structure that is easy to navigate and manipulate, so that exactly the required elements can be picked out.

After parsing, the extracted data fields are stored in formats such as CSV, JSON, or Excel for further analysis and integration.

These datasets support a range of use cases that help uncover market dynamics.
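The request, parse, and store sequence can be sketched in Python with the standard library alone. Treat this as one possible shape rather than the way any particular scraping service works: the function names `fetch_html`, `parse_links`, and `to_json`, the `User-Agent` string, and the choice to collect links are all assumptions made for illustration.

```python
import json
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch_html(url, timeout=10):
    """Send an HTTP GET request and return the page body as text."""
    request = Request(url, headers={"User-Agent": "etl-example/0.1"})  # hypothetical agent name
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkParser(HTMLParser):
    """Keeps only the href and visible text of each <a> element."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = "".join(self._text).strip()
            self.links.append({"href": self._href, "text": text})
            self._href = None


def parse_links(html):
    """Parse the HTML and return the required elements as records."""
    parser = LinkParser()
    parser.feed(html)
    return parser.links


def to_json(records):
    """Serialize the extracted records as JSON text for storage."""
    return json.dumps(records, indent=2)
```

In use, you would call `to_json(parse_links(fetch_html(url)))` with a page you are permitted to scrape and write the result to a file. Excel output needs a third-party library, so it is left out of this sketch.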

Price Monitoring

Web scraping is a great help in price monitoring. As a retailer on an e-commerce platform like Amazon, it is practically impossible to visit thousands of competitor product pages and monitor price changes manually.

With a web scraping service, you can extract pricing details such as current prices, listed prices, discounts, and savings percentages.

The extract, transform, and load process applies here. First, the relevant data fields are fetched from the platform. Next, the data is curated and cleaned to ensure consistency and accuracy. Finally, it is loaded into a database, and the whole job can be scheduled to run at specific intervals.
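As a rough sketch of the cleaning and loading steps for pricing data, the Python below normalizes price strings, derives a savings percentage, and loads the result into an in-memory SQLite database. The rows in `raw_rows`, the `prices` table, and its column names are invented for illustration, and prices are kept as floats only to keep the example short.

```python
import sqlite3

# Hypothetical scraped rows; a real platform will use its own fields and formats.
raw_rows = [
    {"product": "Blender", "listed_price": "$49.99", "current_price": "$39.99"},
    {"product": "Stand Mixer", "listed_price": "$1,200.00", "current_price": " $900.00 "},
]


def parse_price(text):
    """Turn a price string such as '$1,200.00' into a float."""
    return float(text.strip().replace("$", "").replace(",", ""))


def transform(row):
    """Clean one row and derive the savings percentage."""
    listed = parse_price(row["listed_price"])
    current = parse_price(row["current_price"])
    return {
        "product": row["product"].strip(),
        "listed_price": listed,
        "current_price": current,
        "savings_pct": round((listed - current) / listed * 100, 1),
    }


def load(rows, connection):
    """Load the cleaned rows into a SQLite table."""
    connection.execute(
        "CREATE TABLE IF NOT EXISTS prices ("
        "product TEXT, listed_price REAL, current_price REAL, savings_pct REAL)"
    )
    connection.executemany(
        "INSERT INTO prices VALUES "
        "(:product, :listed_price, :current_price, :savings_pct)",
        rows,
    )
    connection.commit()


clean_rows = [transform(row) for row in raw_rows]
connection = sqlite3.connect(":memory:")  # use a file path to keep the data between runs
load(clean_rows, connection)
stored = connection.execute(
    "SELECT product, savings_pct FROM prices ORDER BY savings_pct DESC"
).fetchall()
```

Running the same script at fixed intervals, for example from a system scheduler, would build up a price history over time; the scheduling itself is outside this sketch.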

Efficient Resource Allocation

With insights from point of interest (POI) or other geographical data, you can make better decisions for your company by allocating resources efficiently.

Suppose you’re an online retailer who has run out of refrigerators. To restock, you need to know when the delivery route is least busy so that the inventory can be delivered conveniently and on schedule.

The ETL process extracts geospatial data, categorizes it by how busy the route is at different times, and presents it for analysis and decision-making. This helps with inventory management and customer satisfaction.
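The categorization step might look something like the Python sketch below. Everything in it is assumed for illustration: the `observations` records, the idea of counting vehicles per hour, and the threshold of 100 are placeholders, since real geospatial feeds differ widely in what they report.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical traffic observations for one delivery route.
observations = [
    {"hour": 8, "vehicles": 120},
    {"hour": 8, "vehicles": 140},
    {"hour": 11, "vehicles": 60},
    {"hour": 14, "vehicles": 50},
    {"hour": 14, "vehicles": 80},
    {"hour": 18, "vehicles": 150},
]


def average_by_hour(rows):
    """Group observations by hour and average the vehicle counts."""
    grouped = defaultdict(list)
    for row in rows:
        grouped[row["hour"]].append(row["vehicles"])
    return {hour: mean(counts) for hour, counts in grouped.items()}


def categorize(averages, threshold):
    """Label each hour as busy or quiet against an assumed threshold."""
    return {
        hour: "busy" if average >= threshold else "quiet"
        for hour, average in averages.items()
    }


averages = average_by_hour(observations)
labels = categorize(averages, threshold=100)
quietest_hour = min(averages, key=averages.get)  # candidate delivery slot
```

With this sample data, the morning and evening hours come out as busy, and the quietest hour is the natural candidate for scheduling the delivery.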

Market Research

Web scraping is essential for conducting market research in any industry. You can extract data from competitor websites, industry forums, social media platforms, review sites, and more. A key part of accessing data legally is gathering only publicly available information.

The data points are transformed by removing duplicate values, fixing errors, and validating them for accuracy.

For market research, analyze data on market conditions, competitor strategies, performance, and customer preferences to gain useful insights. With these, you can implement data-driven strategies and decisions as your business evolves.
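Here is a small Python sketch of that transformation, assuming review-style records with a numeric rating. The sample `raw_reviews`, the 0 to 5 rating range, and the rule for what counts as a duplicate are assumptions chosen for the example, not fixed rules.

```python
# Hypothetical records scraped from forums and review sites.
raw_reviews = [
    {"source": "forum", "product": " Acme Kettle ", "rating": "4.5"},
    {"source": "forum", "product": "acme kettle", "rating": "4.5"},  # duplicate once cleaned
    {"source": "review-site", "product": "Acme Kettle", "rating": "n/a"},  # invalid rating
    {"source": "review-site", "product": "Acme Toaster", "rating": "3"},
]


def clean(record):
    """Fix formatting errors; return None if the record fails validation."""
    product = " ".join(record["product"].split()).title()
    try:
        rating = float(record["rating"])
    except ValueError:
        return None
    if not 0 <= rating <= 5:
        return None
    return {"source": record["source"], "product": product, "rating": rating}


def transform(records):
    """Clean every record, drop invalid ones, and remove duplicates."""
    seen = set()
    result = []
    for record in records:
        cleaned = clean(record)
        if cleaned is None:
            continue
        key = (cleaned["source"], cleaned["product"], cleaned["rating"])
        if key in seen:
            continue
        seen.add(key)
        result.append(cleaned)
    return result


clean_reviews = transform(raw_reviews)
```

Cleaning before deduplicating matters here: the first two records only become identical once whitespace and capitalization are normalized.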

Lead Generation

Web scraping helps businesses generate leads for sales and find candidates for recruitment at various levels. You can improve lead generation by using web scraping to access high-quality lead databases.

The lead’s information, such as name, position, education, and career, is converted into JSON, CSV, or Excel format. You can then approach the lead with a tailored strategy that makes them more likely to convert into a customer.

However, this is likely to raise privacy concerns, because people are naturally cautious about their private contact information being extracted from platforms like LinkedIn.

Thus, to protect people’s safety and privacy, only publicly available data should be collected by web scraping, so that contact details are not misused for spamming or harassment.
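The conversion itself is straightforward. The Python sketch below shows one way to write the same lead records as JSON and as CSV. The `Lead` class mirrors the fields named above, and the two sample leads are fictional placeholders.

```python
import csv
import io
import json
from dataclasses import asdict, dataclass, fields


@dataclass
class Lead:
    name: str
    position: str
    education: str
    career: str


# Fictional placeholder leads, not real people.
leads = [
    Lead("Jane Doe", "Procurement Manager", "MBA", "8 years in retail"),
    Lead("John Roe", "Store Owner", "BSc", "Founder of a local shop"),
]


def to_json(items):
    """Serialize the leads as a JSON array of objects."""
    return json.dumps([asdict(item) for item in items], indent=2)


def to_csv(items):
    """Serialize the leads as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(Lead)])
    writer.writeheader()
    writer.writerows(asdict(item) for item in items)
    return buffer.getvalue()


json_text = to_json(leads)
csv_text = to_csv(leads)
```

Excel output would need a third-party library, so it is not shown here.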

End Note

In conclusion, the synergy between ETL (Extract, Transform, Load) data processes and web scraping unveils a powerful toolkit for navigating the vast sea of information on the internet.

From initiating HTTP requests to parsing HTML content and storing data in various formats like CSV, JSON, or Excel, web scraping facilitates the conversion of raw information into actionable insights.

Supercharge your data strategy with Grepsr’s decade-long expertise in web scraping! Tailor our solutions to match your evolving business needs. Unlock the power of actionable data and start scripting your data-driven success story today. Don’t just adapt—thrive with Grepsr.