What comes to mind when I say “detective”?

A keen mind, a piercing gaze that misses nothing, a long, sharp nose, a pipe always resting in his mouth, and a relentless pursuit of truth.

A man renowned for his exceptional investigative skills.

Yes, you’re right. It’s Sherlock Holmes!

Holmes is celebrated for his ability to solve intricate mysteries through sharp analysis and painstaking research.

Now, what if I told you that Robotic Process Automation (RPA) is the Sherlock Holmes of the digital landscape?

Let me explain how.

Just as Sherlock Holmes, with his keen eye for detail, meticulously sifts through seemingly random clues, RPA excels at examining vast amounts of web data.

It can identify hidden patterns and inconsistencies in data, just as a detective uncovers subtle clues that point to a larger truth.

Just as Holmes wouldn’t waste time on menial tasks, RPA automates repetitive actions like clicking and scrolling through web pages, freeing you to focus on the bigger picture: the valuable insights hidden within the data.

Much as Sherlock Holmes navigates the bustling streets of London, RPA bots traverse complex website structures.

These structures, often designed for human interaction, require actions like scrolling and clicking, which RPA handles reliably by mimicking human behavior through GUI elements.

Think of it as RPA’s way of tailing the right digital suspects. Finally, once the relevant data is identified, these digital detectives meticulously extract it and store it for further analysis: the key to unlocking valuable insights and making strategic business decisions.


What Are the Common Challenges in Web Scraping?

Web scraping is the process of extracting what’s important from an overabundance of raw, unstructured data on public web sources and saving it in a structured format.

However, the scraping process almost inevitably runs into a few common challenges.

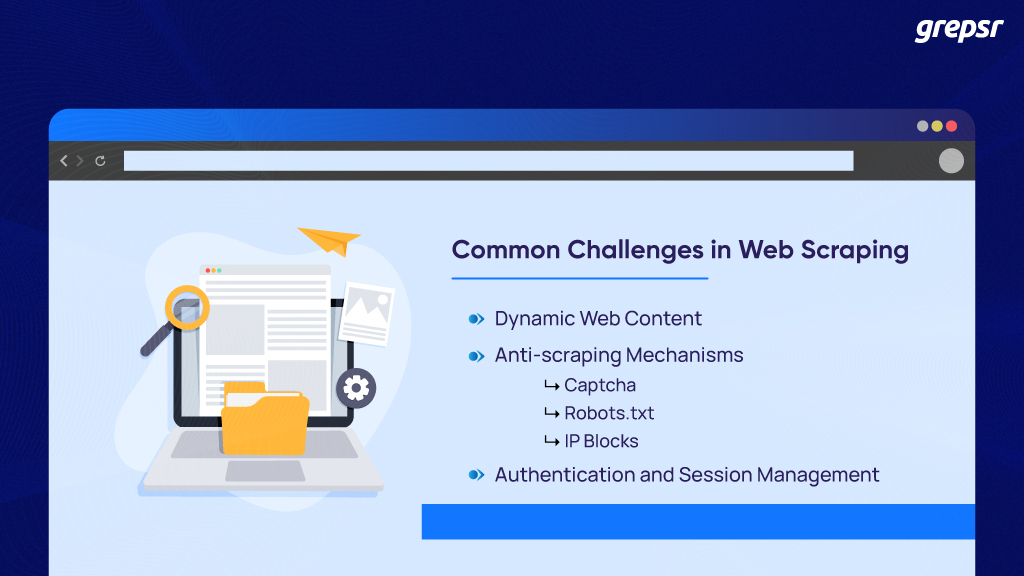

Dynamic Web Content

When browsing the web, you encounter two kinds of pages.

Some display the same static content to every visitor, while others generate their content dynamically, often personalized for each individual.

Consider Amazon: each time you log in, the page is tailored to your browsing history and recent searches.

Dynamic pages often load additional content only in response to user actions like scrolling, clicking, and moving to the next page.

This makes accurate and consistent data extraction challenging for traditional web scrapers, which are programmed to parse static HTML elements and can miss content that only appears after user interaction.

Anti-Scraping Measures

Websites adopt rigorous anti-scraping mechanisms to prevent spam and abuse.

These mechanisms are smart enough to detect bots and block automated activity that could overload the server.

Common measures against spiders (web crawlers) include:

- CAPTCHA: This is one of the most common and widely used defenses against bots.

Think of the familiar “I’m not a robot” checkbox, selecting images that contain traffic lights, or deciphering distorted text.

These gatekeepers single out automated behavior by running a risk analysis on each visitor.

When they detect suspicious activity, they block access to the website until the challenge is passed, keeping the information secure.

- Robots.txt: A robots.txt file tells crawlers which URLs on a particular site they are allowed to access.

It can also set a crawl delay so that requests don’t overload the site and congest the network. So before running your scraper bots, check the site’s robots.txt file, terms of service, and privacy policy.

If you are wondering why, it’s because together they define which content you are permitted to scrape and under what conditions.

- IP Blocking: IP blocking, or IP banning, is a mechanism websites use to track the IP addresses that requests come from.

When the same IP address sends requests unusually often, the site concludes that it belongs to a scraper trying to access and extract its protected data.

If you fall prey to this hunt, you may be temporarily or permanently banned from even visiting the website, let alone scraping its data.
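Checking robots.txt before a run can itself be automated. The sketch below uses Python’s standard-library `urllib.robotparser`; the robots.txt content, the `MyScraperBot` user agent, and the example.com URLs are hypothetical placeholders, and the file is parsed from a local string so no network request is made.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. Against a live site you would instead call
# rp.set_url("https://example.com/robots.txt") followed by rp.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text locally, without making any network request."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


def may_fetch(rp: RobotFileParser, user_agent: str, url: str) -> bool:
    """Return True if the parsed rules allow this user agent to fetch the URL."""
    return rp.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    print(may_fetch(rp, "MyScraperBot", "https://example.com/products/1"))   # True
    print(may_fetch(rp, "MyScraperBot", "https://example.com/private/data"))  # False
    print(rp.crawl_delay("MyScraperBot"))                                    # 5
```

Keep in mind that robots.txt is advisory: it tells well-behaved bots what they may do, but it does not technically stop anyone, which is why sites pair it with measures like CAPTCHAs and IP blocking.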

Authentication & Session Management

Many websites require you to log in and accept session cookies before browsing their content. You have probably wondered why.

The simple reason is that they want to remember you. Logins and cookies personalize your online experience and make return visits more convenient.

For a user searching the web for answers to particular queries, that is a huge convenience.

In web scraping, however, authentication and session management are a cumbersome barrier to efficiency.

Having to fill in login forms with valid credentials at the start of every session, just to reach the content you need, complicates the scraping process.

Making HTTP requests for the same content multiple times makes matters worse, slowing down data extraction and wasting effort.

There are many other pain points, such as honeypot traps, browser fingerprinting, website structure changes, scalability, and legal issues. Not all of them can be solved easily, but RPA does help overcome some of these challenges.

A Few Solutions to Overcome Them with RPA

Integrating RPA into web scraping is a significant step forward, because it deploys software robots to perform tasks that would otherwise need a human. The result is a smoother data extraction process with fewer interruptions.

Handling Dynamic Web Pages with RPA

As mentioned above, dynamic web pages require user interaction such as scrolling and clicking “Show more” or “Next page” buttons.

These GUI elements are a hassle to deal with because they slow down data extraction.

With robotic process automation, however, bots can handle these elements by interacting with them just as a genuine user would.

To locate the dynamic elements, bots rely on techniques like XPath expressions, CSS selectors, or element attributes.
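Stripped of the GUI details, “keep clicking until there are no more pages” is a simple loop. Here is a minimal Python sketch under stated assumptions: `fetch_page` is a hypothetical callback standing in for whatever the bot does to load the next batch (clicking “Next page” or “Show more”), returning the items found plus a token for the next page, or `None` once the button is gone. The `max_pages` cap is an illustrative safeguard against endless pagination.

```python
from typing import Callable, Iterator, List, Optional, Tuple

# fetch_page(token) -> (items on this page, token for the next page or None).
# It is a hypothetical hook for the bot's "click Next / Show more" action.
PageFetcher = Callable[[Optional[str]], Tuple[List[str], Optional[str]]]


def crawl_all_pages(fetch_page: PageFetcher, max_pages: int = 100) -> Iterator[str]:
    """Keep 'clicking next' until no next page remains or max_pages is reached."""
    token: Optional[str] = None  # None means "start on the first page"
    for _ in range(max_pages):
        items, token = fetch_page(token)
        yield from items
        if token is None:  # no "Next page" button left, so stop
            break


if __name__ == "__main__":
    # A fake three-page site, used only to demonstrate the loop.
    fake_site = {None: (["a", "b"], "p2"), "p2": (["c"], "p3"), "p3": (["d"], None)}
    print(list(crawl_all_pages(lambda t: fake_site[t])))  # ['a', 'b', 'c', 'd']
```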
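To illustrate locating elements by attribute, here is a small sketch using Python’s built-in `xml.etree.ElementTree`, which supports a limited XPath subset including `[@attr='value']` predicates. The markup, the class names, and the `data-sku` attribute are hypothetical; the equivalent CSS selector for the product cards would be `div.product`. Real-world HTML is often not well-formed XML, so in practice an HTML-aware parser such as lxml is the more common choice.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional, Union

# Hypothetical, well-formed listing markup used only for illustration.
SNIPPET = """
<div id="results">
  <div class="product" data-sku="A1"><span class="name">Lamp</span><span class="price">19.99</span></div>
  <div class="product" data-sku="B2"><span class="name">Desk</span><span class="price">120.00</span></div>
  <div class="ad"><span class="name">Sponsored</span></div>
</div>
"""

Product = Dict[str, Union[str, float, None]]


def extract_products(markup: str) -> List[Product]:
    """Locate product cards by their class attribute and pull out structured fields."""
    root = ET.fromstring(markup.strip())
    products: List[Product] = []
    # XPath subset: every descendant <div> whose class attribute is exactly "product".
    for card in root.findall(".//div[@class='product']"):
        price_text: Optional[str] = card.findtext("span[@class='price']")
        products.append({
            "sku": card.get("data-sku"),  # read a custom data attribute
            "name": card.findtext("span[@class='name']"),
            "price": float(price_text) if price_text else None,
        })
    return products


if __name__ == "__main__":
    for product in extract_products(SNIPPET):
        print(product)
```

The sponsored `<div class="ad">` is skipped because its class does not match, which is how selectors let a bot target only the data it needs.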

Plus, you can integrate browser automation tools like Puppeteer, which drives a headless Chrome or Chromium, to render the page and execute its JavaScript. This way, bots can scrape data from the dynamically generated content of sites that require user interaction.

Scraping dynamic web pages is therefore much easier with RPA bots.

Overcoming CAPTCHA and Anti-Scraping Mechanisms

Several free and paid third-party CAPTCHA-solving services exist, and RPA bots can submit CAPTCHA challenges to them through their APIs.

Another approach is to simulate human-like browsing behavior, for example by adding randomized delays between requests. Throttling, that is, capping the number of requests per minute, also helps avoid triggering rate limits and anti-bot defenses.

Similarly, bots can rotate IP addresses through a pool of proxies to avoid IP blocks. Spreading requests across many addresses also resembles the varied traffic of many different users, which lowers the risk of detection.

To sum up, RPA overcomes anti-scraping mechanisms by combining proxy rotation, randomized delays, request throttling, and CAPTCHA-solving services.
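Randomized delays and throttling can be combined in one small helper. The following Python sketch is one possible approach, not a prescribed one: the class name `PoliteThrottle` and the default limits (20 requests per minute, 0.5 to 2 second pauses) are illustrative assumptions rather than values any site publishes. The clock, sleep function, and random generator are injectable so the behavior can be tested without actually waiting.

```python
import random
import time
from typing import Callable, Optional, Tuple


class PoliteThrottle:
    """Cap the request rate and add a random, human-like pause before each request."""

    def __init__(
        self,
        max_per_minute: int = 20,
        jitter: Tuple[float, float] = (0.5, 2.0),
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
        rng: Optional[random.Random] = None,
    ) -> None:
        self.min_interval = 60.0 / max_per_minute  # minimum seconds between requests
        self.jitter = jitter  # (low, high) bounds of the random pause, in seconds
        self.clock = clock
        self.sleep = sleep
        self.rng = rng or random.Random()
        self._last: Optional[float] = None  # when the previous request was released

    def wait(self) -> float:
        """Sleep until the next request may go out; return the seconds slept."""
        delay = self.rng.uniform(*self.jitter)  # randomized, human-like pause
        if self._last is not None:
            elapsed = self.clock() - self._last
            # Never exceed max_per_minute, however short the random pause is.
            delay = max(delay, self.min_interval - elapsed)
        self.sleep(delay)
        self._last = self.clock()
        return delay
```

In a scraping loop you would create one `PoliteThrottle` per target site and call `throttle.wait()` immediately before each request.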
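Proxy rotation can be as simple as cycling through a pool. This Python sketch uses the standard library’s `urllib.request.ProxyHandler` and `build_opener` to create one opener per request, round-robin; the proxy addresses and the function name `rotating_openers` are hypothetical placeholders. Commercial proxy services often handle rotation on their side instead, so treat this as one illustrative option.

```python
import itertools
import urllib.request
from typing import Iterator, List

# Hypothetical proxy pool; replace with the endpoints your proxy provider gives you.
PROXIES: List[str] = [
    "http://10.0.0.1:8080",
    "http://10.0.0.2:8080",
    "http://10.0.0.3:8080",
]


def rotating_openers(proxies: List[str]) -> Iterator[urllib.request.OpenerDirector]:
    """Yield one opener per request, cycling round-robin through the proxy pool."""
    for proxy in itertools.cycle(proxies):
        # Route both HTTP and HTTPS traffic through the current proxy.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        yield urllib.request.build_opener(handler)
```

Usage would look like `openers = rotating_openers(PROXIES)` once, then `next(openers).open(url, timeout=10)` for each request, so consecutive requests leave from different addresses. Picking proxies at random instead of round-robin is an equally reasonable design choice.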

Managing Authentication and Session Handling

As mentioned earlier, filling out forms and logging in to access the website every time a request is made is an inefficient way to collect data.

Fortunately, RPA bots save the day by automating the login process and entering credentials into website forms.

They interact with GUI elements such as text fields and buttons, entering the user’s details (usually a username and password) and submitting them to gain full access to the website, including areas restricted to logged-in users.

Once the authentication process is over, it’s time to manage session cookies.

Bots capture those session cookies and maintain them throughout the automated scraping process.

They also re-authenticate automatically when a session expires, keeping access continuous until the data extraction process is complete.

This means you need not worry much about authentication failures, because well-built RPA bots include error handling and recovery mechanisms for both authentication and session timeouts.
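The log-in-once, reuse-cookies, re-authenticate-on-expiry pattern can be sketched in a few lines of Python. Everything here is an assumption for illustration: `login_fn` and `fetch_fn` are hypothetical hooks (the first performs the form login and returns session cookies, the second requests a URL with those cookies and raises `SessionExpired` when the site asks for a new login), and `max_reauth` is an arbitrary retry limit.

```python
from typing import Callable, Dict, Optional


class SessionExpired(Exception):
    """Raised by fetch_fn when the site reports that the session has expired."""


class SessionManager:
    """Log in once, reuse the session cookies, and re-authenticate on expiry."""

    def __init__(
        self,
        login_fn: Callable[[], Dict[str, str]],
        fetch_fn: Callable[[str, Dict[str, str]], str],
        max_reauth: int = 2,
    ) -> None:
        self.login_fn = login_fn  # hypothetical: fills the login form, returns cookies
        self.fetch_fn = fetch_fn  # hypothetical: fetches a URL using those cookies
        self.max_reauth = max_reauth
        self.cookies: Optional[Dict[str, str]] = None
        self.logins = 0  # how many times we had to authenticate

    def _login(self) -> None:
        self.cookies = self.login_fn()
        self.logins += 1

    def get(self, url: str) -> str:
        if self.cookies is None:
            self._login()  # first request: authenticate once
        failures = 0
        while True:
            try:
                return self.fetch_fn(url, self.cookies)  # reuse the stored cookies
            except SessionExpired:
                failures += 1
                if failures > self.max_reauth:
                    raise  # give up after repeated failures instead of looping forever
                self._login()  # session timed out: log in again, then retry
```

With Python’s standard library, a real `fetch_fn` could be built on an opener created with `urllib.request.build_opener(urllib.request.HTTPCookieProcessor(http.cookiejar.CookieJar()))`, which stores and resends cookies automatically.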

To Wrap Up

Just as Sherlock Holmes would never leave a case half-solved, RPA helps ensure that all the valuable data is meticulously extracted.

By automating web scraping tasks, RPA frees you to focus on the real mysteries: interpreting the data and making strategic decisions.

So, put on your deerstalker hat, fire up your RPA tools, and consider Grepsr as your trusted Watson.

With its expertise in RPA-integrated web scraping, Grepsr can be the missing piece you need to crack the case of hidden insights within the vast web. Get ready to lead your business down the trail of success!