Great models start with great data. If your team relies on web scraping for AI training data, the way you plan, collect, and prepare that data determines how well your models perform.

This guide lays out a simple path from clear objectives to clean, training-ready datasets. It covers machine learning dataset collection, data acquisition for AI, and practical preparation of LLM training data.

Start with a clear objective

Write one sentence that states the task and the signals you need, for example: “classify product reviews by sentiment and topic using review text, rating, category, brand, and time.” Then set a few simple success measures, such as minimum coverage, a ceiling on the duplicate rate, and a freshness target. They keep everyone aligned and make later trade-offs easier.

Pick the right sources

Choose sources that actually contain the signals you need. Public datasets help you start fast. Domain websites provide fresh, granular details. Official portals offer ground truth. Internal systems add outcomes and labels. If you scrape the web for training data, first confirm you are allowed to access each site, and respect its robots.txt file and terms of service. Keep provenance (source URL and capture time) for every record.
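
As a minimal sketch of the robots.txt check and provenance capture, assuming Python's standard-library `urllib.robotparser` and a robots.txt file you have already downloaded, the snippet below skips disallowed URLs and stamps each kept record with its source URL and UTC capture time. `Record`, `build_robot_parser`, and `capture` are hypothetical names for illustration. robots.txt is only one signal; the terms of service still need their own review.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional
from urllib.robotparser import RobotFileParser


@dataclass(frozen=True)
class Record:
    """A scraped record that carries its provenance (hypothetical type)."""
    data: dict
    source_url: str
    captured_at: str  # ISO 8601 timestamp in UTC


def build_robot_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def capture(parser: RobotFileParser, user_agent: str, url: str,
            data: dict) -> Optional[Record]:
    """Return a provenance-stamped record, or None if robots.txt disallows the URL."""
    if not parser.can_fetch(user_agent, url):
        return None
    return Record(data=data, source_url=url,
                  captured_at=datetime.now(timezone.utc).isoformat())


# Usage with an assumed robots.txt that blocks /private/ for all agents.
parser = build_robot_parser("User-agent: *\nDisallow: /private/\n")
print(capture(parser, "example-bot", "https://example.com/reviews/1", {"rating": 5}))
print(capture(parser, "example-bot", "https://example.com/private/x", {}))  # None
```

Storing provenance on the record itself, rather than in a side log, means it travels with the data through cleaning and labeling.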

Explore how we structure compliant, large-scale programs in Grepsr Services and see results in Customer Stories.

Design the pipeline around the schema

Define fields, types, ranges, and “required vs optional” up front. This schema becomes the contract across machine learning dataset collection, cleaning, labeling, and training. Start with a small, diverse sample to reveal edge cases early. Version your crawlers, schema, and outputs so you can reproduce any run.
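
One way to make the schema an enforceable contract is to declare it as data and check every record against it. The sketch below assumes the review-classification objective from earlier; `Field`, `REVIEW_SCHEMA`, and `validate` are illustrative names, not a specific library. It returns violations instead of raising, so they can be logged for quick fixes during structuring.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Field:
    """One column in the (hypothetical) schema contract."""
    name: str
    dtype: type
    required: bool = True
    min_value: Optional[float] = None
    max_value: Optional[float] = None


# Hypothetical schema for the review-classification example.
REVIEW_SCHEMA = [
    Field("review_text", str),
    Field("rating", int, min_value=1, max_value=5),
    Field("category", str),
    Field("brand", str, required=False),
    Field("captured_at", str),
]


def validate(record: dict[str, Any], schema: list[Field]) -> list[str]:
    """Return human-readable violations; an empty list means the record conforms."""
    violations = []
    for f in schema:
        value = record.get(f.name)
        if value is None:
            if f.required:
                violations.append(f"{f.name}: missing required field")
            continue  # optional and absent is fine
        if not isinstance(value, f.dtype):
            violations.append(
                f"{f.name}: expected {f.dtype.__name__}, got {type(value).__name__}")
            continue  # skip range checks on the wrong type
        if f.min_value is not None and value < f.min_value:
            violations.append(f"{f.name}: {value} below minimum {f.min_value}")
        if f.max_value is not None and value > f.max_value:
            violations.append(f"{f.name}: {value} above maximum {f.max_value}")
    return violations
```

Keeping the schema as a plain list makes it easy to version alongside crawlers and outputs.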

Collect responsibly (and efficiently)

Good data acquisition for AI balances speed with respect for sites and privacy. Limit the scope to what the objective needs. Rate-limit politely. If personal data could appear, apply minimization and clear handling rules. Track costs per record so you notice expensive sources before they balloon.
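
Polite rate limiting can be as simple as enforcing a minimum interval between requests to the same host. The sketch below is one hypothetical way to do it (`PoliteFetcher`, `wait_turn`, `record_cost`, and `cost_per_record` are illustrative names). It also accumulates spend and record counts per source so cost per record is easy to watch. The clock and sleep functions are injectable so the logic can be tested without real delays.

```python
import time
from collections import defaultdict


class PoliteFetcher:
    """Minimum delay per host plus per-source cost tracking (hypothetical helper)."""

    def __init__(self, min_interval_s: float, clock=time.monotonic, sleep=time.sleep):
        self.min_interval_s = min_interval_s
        self._clock = clock
        self._sleep = sleep
        self._last_request: dict[str, float] = {}
        self.cost: defaultdict[str, float] = defaultdict(float)
        self.records: defaultdict[str, int] = defaultdict(int)

    def wait_turn(self, host: str) -> None:
        """Block until at least min_interval_s has passed since the last request to host."""
        last = self._last_request.get(host)
        if last is not None:
            remaining = self.min_interval_s - (self._clock() - last)
            if remaining > 0:
                self._sleep(remaining)
        self._last_request[host] = self._clock()

    def record_cost(self, source: str, cost: float, n_records: int) -> None:
        """Add spend (proxy, compute, vendor fees, ...) and yielded records for a source."""
        self.cost[source] += cost
        self.records[source] += n_records

    def cost_per_record(self, source: str) -> float:
        """Average cost per record; infinity if the source has yielded nothing yet."""
        n = self.records[source]
        return self.cost[source] / n if n else float("inf")
```

Call `wait_turn(host)` before each request; sources whose `cost_per_record` keeps climbing are the ones to review first.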

Turn raw captures into training-ready data

Most work happens after collection. Keep it repeatable and light on surprises.

Cleaning. Remove duplicates with stable keys, standardize dates and currencies, and decide when to drop vs impute missing values.
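
A minimal cleaning sketch, assuming records are plain dictionaries: hash a few whitespace-trimmed fields into a stable key so the same item deduplicates the same way on every run, and coerce dates from a short list of known formats into ISO 8601. The field names and the `DATE_FORMATS` list are assumptions; whether `01/05/2024` means 1 May or 5 January depends on the source, so set formats per source. Currency standardization is left out because it needs an exchange-rate source.

```python
import hashlib
from datetime import datetime
from typing import Optional

# Assumed formats seen in the sources; order matters when formats overlap.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")


def stable_key(record: dict, key_fields: tuple[str, ...]) -> str:
    """Hash trimmed key fields so the same entity gets the same key across runs."""
    raw = "|".join(str(record.get(f, "")).strip() for f in key_fields)
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def to_iso_date(value: str) -> Optional[str]:
    """Return YYYY-MM-DD, or None so the drop-vs-impute rule can decide."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def dedupe(records: list[dict], key_fields: tuple[str, ...]) -> list[dict]:
    """Keep the first record seen for each stable key."""
    seen: set[str] = set()
    unique = []
    for r in records:
        k = stable_key(r, key_fields)
        if k not in seen:
            seen.add(k)
            unique.append(r)
    return unique
```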

Structuring. Conform to the schema and log any violations for quick fixes.

Splits. For time-sensitive problems, use time-aware splits so evaluation mirrors production.
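
A time-aware split can be sketched by holding out everything captured in the most recent window and training on what came before. This assumes each record has an ISO 8601 timestamp in a field such as `captured_at` (a hypothetical name) and that all timestamps share one timezone convention, since Python cannot compare naive and timezone-aware datetimes. `time_split` is an illustrative helper.

```python
from datetime import datetime, timedelta


def time_split(records: list[dict], ts_field: str,
               test_window: timedelta) -> tuple[list[dict], list[dict]]:
    """Train on records up to (max timestamp - test_window); test on the rest."""
    if not records:
        return [], []
    times = [datetime.fromisoformat(r[ts_field]) for r in records]
    cutoff = max(times) - test_window
    train = [r for r, t in zip(records, times) if t <= cutoff]
    test = [r for r, t in zip(records, times) if t > cutoff]
    return train, test
```

Unlike a random split, this never lets the model train on data newer than what it is evaluated on.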

Validation. Profile datasets and watch for anomalies and drift. TensorFlow Data Validation (TFDV) is a good place to see how this is done in practice.
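
TFDV provides its own drift and skew checks. As a library-free illustration of the idea (a swapped-in technique, not TFDV's method), the sketch below computes the Population Stability Index (PSI) between a reference sample and a new sample of a categorical field. A small `eps` avoids taking the log of zero for categories that appear in only one sample. A commonly quoted rule of thumb treats PSI above roughly 0.2 as a meaningful shift, but that threshold is an assumption to tune for your data.

```python
import math
from collections import Counter


def psi(expected: list[str], actual: list[str], eps: float = 1e-6) -> float:
    """Population Stability Index between two categorical samples (0 means identical)."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    e_counts, a_counts = Counter(expected), Counter(actual)
    total = 0.0
    for cat in set(expected) | set(actual):
        e = max(e_counts[cat] / len(expected), eps)  # reference share
        a = max(a_counts[cat] / len(actual), eps)    # new share
        total += (a - e) * math.log(a / e)
    return total
```

For example, comparing last month's category mix with this week's crawl flags a source that has quietly started returning a different slice of the site.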

Labeling and enrichment

Treat labeling as a pipeline, not a one-off task. Write short guidelines with examples. Where safe, start with simple programmatic rules to create draft labels, then audit a stratified sample. Join helpful external signals (like location or category taxonomies) to make features stronger. This is especially important for LLM training data, where quality and diversity count for more than raw volume.
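
Programmatic draft labels and a stratified audit might look like the sketch below. The keyword rules are hypothetical placeholders for the sentiment example: each rule returns a label or abstains with `None`, and items no rule covers fall through to human labeling. The audit sample takes up to a fixed number of items per draft label, so rare labels are checked too instead of being swamped by common ones. `RULES`, `draft_label`, and `stratified_sample` are illustrative names.

```python
import random
from collections import defaultdict
from typing import Callable, Optional

Rule = Callable[[str], Optional[str]]

# Hypothetical keyword rules; real rules come from your labeling guidelines.
RULES: list[Rule] = [
    lambda text: "negative" if "refund" in text.lower() else None,
    lambda text: "positive" if "love" in text.lower() else None,
]


def draft_label(text: str, rules: list[Rule]) -> Optional[str]:
    """First rule that fires wins; None means a human should label the item."""
    for rule in rules:
        label = rule(text)
        if label is not None:
            return label
    return None


def stratified_sample(items: list[dict], stratum_key: str,
                      per_stratum: int, seed: int = 0) -> list[dict]:
    """Take up to per_stratum items from each stratum, reproducibly."""
    rng = random.Random(seed)
    groups: defaultdict = defaultdict(list)
    for item in items:
        groups[item.get(stratum_key)].append(item)
    sample = []
    for _, group in sorted(groups.items(), key=lambda kv: str(kv[0])):
        sample.extend(rng.sample(group, min(per_stratum, len(group))))
    return sample
```

Auditor disagreement rates per stratum then show which rules need tightening.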

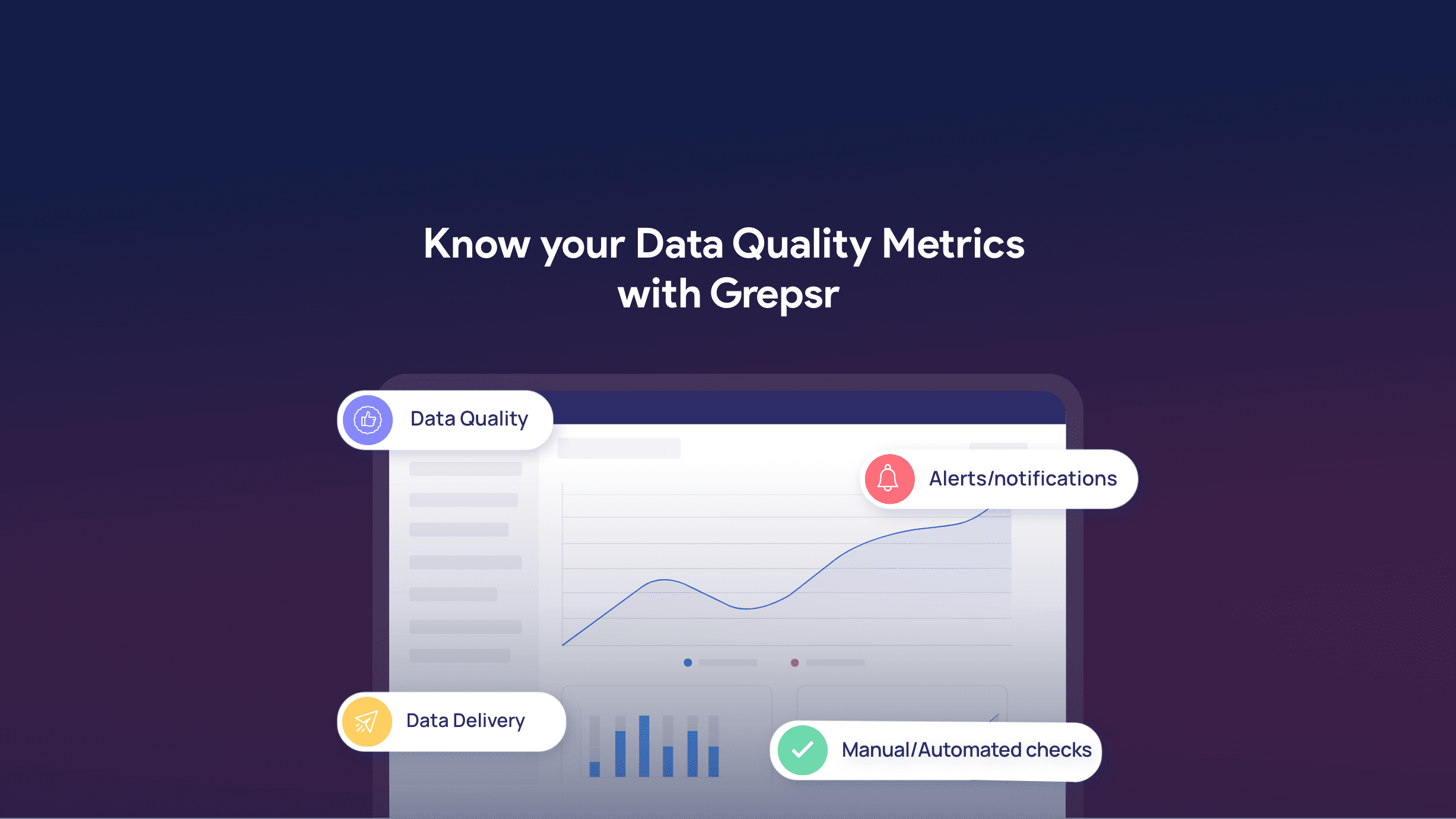

Guardrails that scale

As volume grows, add clear guardrails:

- Fail a run if duplicate or null rates cross a threshold.

- Watch distribution drift between training and serving.

- Keep a brief dataset card (purpose, sources, licenses, fields, known gaps) so downstream teams use data correctly.
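
The first guardrail can be a small check that runs at the end of each job and raises when thresholds are exceeded, which fails the run. The default thresholds (1% duplicates, 5% nulls per required field), `check_guardrails`, and `DataQualityError` are hypothetical; set them from your own success measures.

```python
class DataQualityError(Exception):
    """Raised to fail a run whose output breaks a guardrail (hypothetical type)."""


def check_guardrails(records: list[dict], key_field: str, required: list[str],
                     max_dup_rate: float = 0.01,
                     max_null_rate: float = 0.05) -> dict:
    """Return duplicate and null rates, or raise if any threshold is crossed."""
    n = len(records)
    if n == 0:
        raise DataQualityError("empty run")
    dup_rate = 1 - len({r.get(key_field) for r in records}) / n
    null_rates = {f: sum(r.get(f) is None for r in records) / n for f in required}

    problems = []
    if dup_rate > max_dup_rate:
        problems.append(f"duplicate rate {dup_rate:.1%} > {max_dup_rate:.1%}")
    for f, rate in null_rates.items():
        if rate > max_null_rate:
            problems.append(f"{f} null rate {rate:.1%} > {max_null_rate:.1%}")
    if problems:
        raise DataQualityError("; ".join(problems))
    return {"dup_rate": dup_rate, "null_rates": null_rates}
```

The returned rates are also worth recording in the dataset card, so downstream teams can see how clean each delivery was.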

Example: rental market analysis using web scraping

A housing team wants weekly insights across cities. They scrape listing pages, store raw HTML and extracted entities, and capture provenance for audit. The schema covers price, bedrooms, property type, geo, and capture time.

Cleaning standardizes currency and area units; simple rules tag “near transit” or “new build,” and a human then audits a sample. A time-aware split holds out the latest week for testing so the evaluation matches reality. The result is a compact, reliable path from scraped web pages to trustworthy training signals.
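
For the rental example, area normalization and the rule-based tags could be sketched as follows. The conversion factor is exact (1 ft = 0.3048 m, so 1 sq ft = 0.09290304 m²), but the field names (`station_distance_m`, `year_built`), the unit spellings, and the thresholds (800 m for “near transit”, two years for “new build”) are assumptions for illustration, not rules from the housing team's analysis.

```python
SQFT_TO_SQM = 0.09290304  # exact, since 1 ft = 0.3048 m


def normalize_area(value: float, unit: str) -> float:
    """Convert a listing's floor area to square metres."""
    unit = unit.strip().lower()
    if unit in ("m2", "sqm", "m²"):
        return value
    if unit in ("ft2", "sqft", "sq ft"):
        return value * SQFT_TO_SQM
    raise ValueError(f"unknown area unit: {unit!r}")  # log it as a schema violation


def tag_listing(listing: dict, current_year: int) -> list[str]:
    """Apply hypothetical draft-tag rules; a human audits a sample afterwards."""
    tags = []
    if listing.get("station_distance_m", float("inf")) < 800:  # assumed threshold
        tags.append("near transit")
    if current_year - listing.get("year_built", 0) <= 2:  # assumed threshold
        tags.append("new build")
    return tags
```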

How Grepsr fits

Grepsr manages collection at scale, aligns outputs to your schema, and delivers data to your lake, warehouse, or lakehouse with dedupe and anomaly flags built in. If you need machine learning dataset collection for a new use case or want to harden data acquisition for AI, we can help you ship faster with fewer surprises.

Closing thoughts

Data quality drives model quality. When you plan the dataset like a product, collect responsibly, and prepare it with clear rules, your LLM training data and ML datasets stay reliable as you scale. If you want a partner to handle the heavy lifting while your team focuses on modeling, Grepsr is ready.

Ready to streamline data acquisition and preparation? Talk to Grepsr

FAQs

1. What makes high-quality AI data?

It fits the task, is consistent, has clear provenance, and passes checks for duplicates, missingness, and drift.

2. Is web scraping allowed for AI training?

It depends on each site's terms and on local law. Follow robots.txt guidance and privacy rules, and avoid restricted sources.

3. How should I split data for time-sensitive use cases?

Use time-aware splits and keep the most recent window for testing so results mirror production.
